
Capturing a biosignal is only the beginning. The real challenge starts once those tiny electrical fluctuations from your brain, heart, or muscles are recorded. What do they mean? How do we clean, interpret, and translate them into something both the machine and eventually we can understand? In this blog, we move beyond sensors to the invisible layer of algorithms and analysis that turns raw biosignal data into insight. From filtering and feature extraction to machine learning and real-time interpretation, this is how your body’s electrical language becomes readable.

Every heartbeat, every blink, every neural spark produces a complex trace of electrical or mechanical activity. These traces, known collectively as biosignals, are the raw currency of human-body intelligence. But in their raw form they’re noisy, dynamic, and difficult to interpret.

The transformation from raw sensor output to interpreted understanding is what we call biosignal processing. It’s the foundation of modern neuro- and bio-technology, enabling everything from wearable health devices to brain-computer interfaces (BCIs).

The Journey: From Raw Signal to Insight

When a biosignal sensor records, it captures a continuous stream of data—voltage fluctuations (in EEG, ECG, EMG), optical intensity changes, or pressure variations.

But that stream is messy. It includes baseline drift, motion artefacts, impedance shifts as electrodes dry, physiological artefacts (eye blinks, swallowing, jaw tension), and environmental noise (mains hum, electromagnetic interference).

Processing converts this noise-ridden stream into usable information: brain rhythms, cardiac cycles, muscle commands, or stress patterns.

Stage 1: Pre-processing — Cleaning the Signal

Before we can make sense of the body’s signals, we must remove the noise.

- Filtering: Band-pass filters (typically 0.5–45 Hz for EEG) remove slow drift and high-frequency interference; notch filters suppress 50/60 Hz mains hum.

- Artifact removal: Independent Component Analysis (ICA) and regression remain the most common methods for removing eye-blink (EOG) and muscle (EMG) artefacts, though hybrid and deep learning–based techniques are becoming more popular for automated denoising.

- Segmentation / epoching: Continuous biosignals are divided into stable time segments—beat-based for ECG or fixed/event-locked windows for EEG (e.g., 250 ms–1 s)—to capture temporal and spectral features more reliably.

- Normalization & baseline correction: Normalization rescales signal amplitudes across channels or subjects, while baseline correction removes constant offsets or drift to align signals to a common reference.

Think of this stage as cleaning a lens: if you don’t remove the smudges, everything you see through it will be distorted.
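As a rough illustration of the filtering step, the sketch below applies a zero-phase band-pass and a mains notch to a synthetic signal using SciPy. The function name `clean_eeg`, the filter order, the notch Q factor, and the test signal are all assumptions chosen for the example, not settings prescribed here.

```python
import numpy as np
from scipy.signal import butter, iirnotch, sosfiltfilt, filtfilt

def clean_eeg(x, fs, band=(0.5, 45.0), mains_hz=50.0, notch_q=30.0):
    """Zero-phase band-pass plus mains notch for a 1-D signal sampled at fs Hz."""
    # Butterworth band-pass, designed as second-order sections for numerical stability.
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    y = sosfiltfilt(sos, x)
    # Narrow notch at the mains frequency (use 60.0 where the grid runs at 60 Hz).
    b, a = iirnotch(mains_hz, notch_q, fs=fs)
    return filtfilt(b, a, y)

# Synthetic test signal (assumed): 10 Hz rhythm + slow drift + 50 Hz hum.
fs = 250.0
t = np.arange(0, 10, 1 / fs)
alpha = np.sin(2 * np.pi * 10 * t)
raw = alpha + 2.0 * t / t[-1] + 0.8 * np.sin(2 * np.pi * 50 * t)
cleaned = clean_eeg(raw, fs)
```

Forward-backward filtering (`sosfiltfilt`, `filtfilt`) avoids phase distortion but needs the whole segment, so it suits offline analysis; a real-time system would typically use causal filters instead.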

Stage 2: Feature Extraction — Finding the Patterns

Once the signal is clean, we quantify its characteristics: features that encode physiological or cognitive states.

Physiological Grounding

- EEG: Arises from synchronized postsynaptic currents in cortical pyramidal neurons.

- EMG: Records summed action potentials from contracting muscle fibers.

- ECG: Reflects the depolarization and repolarization of the atrial and ventricular muscle, with each beat initiated by pacemaker cells in the sinoatrial (SA) node.

Time-domain Features

Mean, variance, RMS, and zero-crossing rate quantify signal amplitude and variability over time. In EMG, Mean Absolute Value (MAV) and Waveform Length (WL) reflect overall muscle activation and fatigue progression.
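To make these definitions concrete, here is a minimal sketch that computes them for one window with NumPy. The function name and the dictionary keys are illustrative choices, and the zero-crossing rate is expressed as the fraction of adjacent sample pairs that change sign.

```python
import numpy as np

def time_domain_features(x):
    """Return common time-domain features for one window of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    signs = np.signbit(x)
    return {
        "mean": x.mean(),
        "variance": x.var(),
        "rms": np.sqrt(np.mean(x ** 2)),
        # Fraction of adjacent sample pairs whose sign differs.
        "zcr": np.mean(signs[1:] != signs[:-1]),
        "mav": np.mean(np.abs(x)),         # Mean Absolute Value
        "wl": np.sum(np.abs(np.diff(x))),  # Waveform Length
    }

window = np.array([1.0, -1.0, 2.0, -2.0])
features = time_domain_features(window)
# For this window: mav = 1.5 and wl = 2 + 3 + 4 = 9.
```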

Frequency & Spectral Features

The power of each EEG band tends to vary systematically across mental states.
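One common way to estimate band power is to integrate a Welch power spectral density over each band. The sketch below does this with SciPy; the band edges are conventional values that vary between labs, and the `BANDS` table, function name, and synthetic signal are assumptions made for the example.

```python
import numpy as np
from scipy.signal import welch

# Conventional band edges in Hz; exact limits differ between labs (assumption).
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 45)}

def band_powers(x, fs):
    """Integrate the Welch PSD over each band (2 s segments, 0.5 Hz resolution)."""
    freqs, psd = welch(x, fs=fs, nperseg=int(2 * fs))
    df = freqs[1] - freqs[0]
    return {name: psd[(freqs >= lo) & (freqs < hi)].sum() * df
            for name, (lo, hi) in BANDS.items()}

# Synthetic signal dominated by a 10 Hz rhythm, plus a little white noise.
fs = 250
t = np.arange(0, 20, 1 / fs)
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.standard_normal(t.size)
powers = band_powers(x, fs)
dominant = max(powers, key=powers.get)  # "alpha" for this signal
```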

Time–Frequency & Non-Linear Features

Wavelet transforms or Empirical Mode Decomposition capture transient events. Entropy- and fractal-based measures reveal complexity, useful for fatigue or cognitive-load studies.

Spatial Features

For multi-channel EEG, spatial filters such as Common Spatial Patterns (CSP) isolate task-specific cortical sources.
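A textbook way to compute CSP filters is a generalized eigendecomposition of the two class covariance matrices. The sketch below follows that formulation on synthetic three-channel data; the function names, array shapes, and data are assumptions for illustration rather than a description of any particular toolbox.

```python
import numpy as np
from scipy.linalg import eigh

def csp_filters(trials_a, trials_b, n_pairs=1):
    """trials_*: (n_trials, n_channels, n_samples). Returns (2 * n_pairs, n_channels)."""
    def mean_cov(trials):
        # Trace-normalized spatial covariance, averaged over trials.
        covs = [x @ x.T / np.trace(x @ x.T) for x in trials]
        return np.mean(covs, axis=0)
    ca, cb = mean_cov(trials_a), mean_cov(trials_b)
    # Generalized eigenproblem ca w = lambda (ca + cb) w, eigenvalues ascending.
    vals, vecs = eigh(ca, ca + cb)
    # Keep the filters at both ends of the spectrum (most discriminative).
    idx = np.r_[np.arange(n_pairs), np.arange(len(vals) - n_pairs, len(vals))]
    return vecs[:, idx].T

def log_var_features(trials, W):
    """Project trials through the filters and return normalized log-variance."""
    z = np.einsum("fc,ncs->nfs", W, trials)
    var = z.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))

# Synthetic data: class A is strong on channel 0, class B on channel 2.
rng = np.random.default_rng(0)
trials_a = rng.standard_normal((20, 3, 200)) * np.array([3.0, 1.0, 1.0])[:, None]
trials_b = rng.standard_normal((20, 3, 200)) * np.array([1.0, 1.0, 3.0])[:, None]
W = csp_filters(trials_a, trials_b)
feats_a = log_var_features(trials_a, W)
feats_b = log_var_features(trials_b, W)
```

The first filter minimizes variance for class A and the last maximizes it, so the two log-variance features move in opposite directions for the two classes, which is what makes them easy to classify.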

Stage 3: Classification & Machine Learning — Teaching Machines to Read the Body

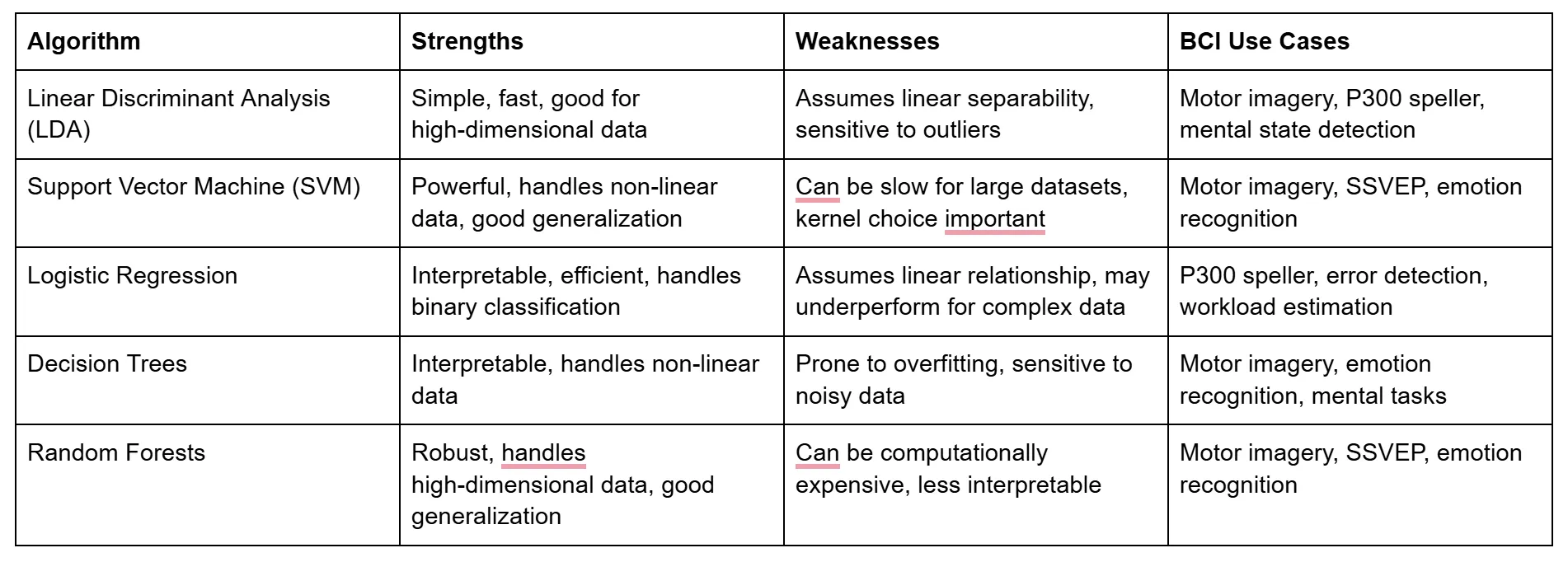

After feature extraction, machine-learning models map those features to outcomes: focused vs fatigued, gesture A vs gesture B, normal vs arrhythmic.

- Classical ML: SVM, LDA, and Random Forest are effective for curated features.

- Deep Learning: CNNs, LSTMs, and Graph CNNs learn directly from raw or minimally processed data.

- Transfer Learning: Improves cross-subject performance by adapting pretrained networks.

- Edge Inference: Deploying compact models (TinyML, quantized CNNs) on embedded hardware to achieve < 10 ms latency.

This is where raw physiology becomes actionable intelligence.
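To show what the classical end of this spectrum looks like, here is a minimal two-class LDA written directly in NumPy. It is a sketch under simple assumptions (equal class priors, a shared covariance, at least two features); the class name `TwoClassLDA` and the synthetic feature clusters are invented for the example, and a production system would more likely use an established library.

```python
import numpy as np

class TwoClassLDA:
    """Fisher linear discriminant for labels 0 and 1, assuming equal priors."""

    def fit(self, X, y):
        X0, X1 = X[y == 0], X[y == 1]
        mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
        # Pooled within-class covariance, lightly regularized for small samples.
        Sw = (np.cov(X0, rowvar=False) * (len(X0) - 1)
              + np.cov(X1, rowvar=False) * (len(X1) - 1)) / (len(X) - 2)
        Sw = Sw + 1e-6 * np.eye(X.shape[1])
        self.w = np.linalg.solve(Sw, mu1 - mu0)
        self.b = -0.5 * self.w @ (mu0 + mu1)
        return self

    def predict(self, X):
        return (X @ self.w + self.b > 0).astype(int)

# Two synthetic feature clusters standing in for, say, "focused" vs "fatigued".
rng = np.random.default_rng(0)
X = np.vstack([rng.standard_normal((100, 2)),
               rng.standard_normal((100, 2)) + 3.0])
y = np.repeat([0, 1], 100)
train = np.arange(200) % 2 == 0  # even rows for training, odd rows for testing
clf = TwoClassLDA().fit(X[train], y[train])
accuracy = np.mean(clf.predict(X[~train]) == y[~train])
```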

Interpreting Results — Making Sense of the Numbers

A robust pipeline delivers meaning, not just data:

- Detecting stress or fatigue for adaptive feedback.

- Translating EEG patterns into commands for prosthetics or interfaces.

- Monitoring ECG spectral shifts to flag early arrhythmias.

- Quantifying EMG coordination for rehabilitation or athletic optimization.

Performance hinges on accuracy, latency, robustness, and interpretability, especially when outcomes influence safety-critical systems.

Challenges and Future Directions

Technical: Inter-subject variability, electrode drift, real-world noise, and limited labeled datasets still constrain accuracy.

Ethical / Explainability: As algorithms mediate more decisions, transparency and consent are non-negotiable.

Multimodal Fusion: Combining EEG + EMG + ECG data improves reliability but raises synchronization, power, and processing challenges.

Edge AI & Context Awareness: The next frontier is continuous, low-latency interpretation that adapts to user state and environment in real time.

Final Thought

Capturing a biosignal is only half the story. What truly powers next-gen neurotech and human-aware systems is turning that signal into sense. From electrodes and photodiodes to filters and neural nets, each link in this chain brings us closer to devices that don’t just measure humans; they understand them.

Every thought, heartbeat, and muscle twitch leaves behind a signal, but how do we actually capture them? In this blog post, we explore the sensors that make biosignal measurement possible, from EEG and ECG electrodes to optical and biochemical interfaces, and what it takes to turn those signals into meaningful data.

When we think of sensors, we often imagine cameras, microphones, or temperature gauges. But some of the most fascinating sensors aren’t designed to measure the world; they’re designed to measure you.

These are biosignal sensors: tiny, precise, and increasingly powerful tools that decode the electrical whispers of your brain, heart, and muscles. They're the hidden layer enabling brain-computer interfaces, wearables, neurofeedback systems, and next-gen health diagnostics.

But how do they actually work? And what makes one sensor better than another?

Let’s break it down, from scalp to circuit board.

First, a Quick Recap: What Are Biosignals?

Biosignals are the body’s internal signals (electrical, optical, or chemical) that reflect brain activity, heart function, muscle movement, and more. If you’ve read our earlier post on biosignal types, you’ll know they’re the raw material for everything from brain-computer interfaces to biometric wearables.

In this blog, we shift focus to the devices and sensors that make it possible to detect these signals in the real world, and what it takes to do it well.

The Devices That Listen In: Biosignal Sensor Types


A Closer Look: How These Sensors Work

1. EEG / ECG / EMG – Electrical Sensors

These measure voltage fluctuations at the skin surface, caused by underlying bioelectric activity.

It’s like trying to hear a whisper in a thunderstorm; brain and muscle signals are tiny, and will get buried under noise unless the electrodes make solid contact and the amplifier filters aggressively.

There are two key electrode types:

- Wet electrodes: Use conductive gel or saline for better signal quality. Still the gold standard in labs.

- Dry electrodes: More practical for wearables but prone to motion artifacts and noise (due to higher electrode-skin contact impedance).

Signal acquisition often involves differential recording and requires high common-mode rejection ratios (CMRR) to suppress environmental noise.

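Fun Fact: Even blinking your eyes generates an electrical artifact (mostly EOG from the moving eyeball and eyelid, plus some EMG from the surrounding muscles) that can overwhelm EEG data. That’s why artifact rejection algorithms are critical in EEG-based systems.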

2. Optical Sensors (PPG, fNIRS)

These use light to infer blood flow or oxygenation levels:

- PPG: Emits light into the skin and measures how much is reflected or transmitted; pulsatile blood flow alters absorption.

- fNIRS: Uses near-infrared light to differentiate oxygenated vs. deoxygenated hemoglobin in the cortex.

Example: Emerging wearable systems like Kernel Flow (fNIRS) and OpenBCI Galea (a multi-sensor headset whose optical channel is PPG) are making optical biosignal measurement accessible outside labs.

3. Galvanic Skin Response / EDA – Emotion’s Electrical Signature

GSR (also called electrodermal activity) sensors detect subtle changes in skin conductance caused by sweat gland activity, a direct output of sympathetic nervous system arousal. When you're stressed or emotionally engaged, your skin becomes more conductive, and GSR sensors pick that up.

These sensors apply a small voltage across two points on the skin and track resistance over time. They're widely used in emotion tracking, stress monitoring, and psychological research due to their simplicity and responsiveness.

Together, these sensors form the foundation of modern biosignal acquisition — but capturing clean signals isn’t just about what you use, it’s about how you use it.

How Signal Quality Is Preserved

Measurement is just step one; capturing clean, interpretable signals involves:

- Analog Front End (AFE): Amplifies low-amplitude signals while rejecting noise.

- ADC: Converts continuous analog signals into digital data.

- Signal Conditioning: Filters out drift, DC offset, and 50/60 Hz noise.

- Artifact Removal: Suppresses eye blinks, jaw clenches, and muscle twitches.

Hardware platforms like TI’s ADS1299 and Analog Devices’ MAX30003 are commonly used in EEG and ECG acquisition systems.
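On the software side, the first thing most pipelines do with ADC output is convert raw integer codes back into voltages. The sketch below shows that arithmetic for a generic bipolar ADC; the function name and the default reference voltage, gain, and resolution are illustrative assumptions, so the real values should always come from the datasheet of the chip in use.

```python
def counts_to_microvolts(counts, vref=4.5, gain=24, bits=24):
    """Convert signed ADC codes to input-referred microvolts.

    Assumes a bipolar converter whose full-scale input range is
    +/- vref / gain, spread over 2**bits codes (all defaults are assumptions).
    """
    lsb_volts = (2 * vref / gain) / (2 ** bits)
    return counts * lsb_volts * 1e6

one_lsb_uv = counts_to_microvolts(1)            # size of a single code step
full_scale_uv = counts_to_microvolts(2 ** 23)   # positive full-scale input
```

With these assumed defaults a single code step is roughly 0.022 µV, which gives a sense of why 24-bit converters are attractive for microvolt-level signals such as EEG.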

New Frontiers in Biosignal Measurement

- Textile Sensors: Smart clothing with embedded electrodes for long-term monitoring.

- Biochemical Sensors: Detect metabolites like lactate, glucose, or cortisol in sweat or saliva.

- Multimodal Systems: Combining EEG + EMG + IMU + PPG in unified setups to boost accuracy.

A recent study involving transradial amputees demonstrated that combining EEG and EMG signals via a transfer learning model increased classification accuracy by 2.5–4.3% compared to EEG-only models.

Other multimodal fusion approaches, such as combining EMG and force myography (FMG), have shown classification improvements of over 10% compared to EMG alone.

Why Should You Care?

Because how we measure determines what we understand, and what we can build.

Whether it's a mental wellness wearable, a prosthetic limb that responds to thought, or a personalized neurofeedback app, it all begins with signal integrity. Bad data means bad decisions. Good signals? They unlock new frontiers.

Final Thought

We’re entering an era where technology doesn’t just respond to clicks; it responds to cognition, physiology, and intent.

Biosignal sensors are the bridge. Understanding them isn’t just for engineers; it’s essential for anyone shaping the future of human-aware tech.

In our previous blog, we explored how biosignals serve as the body's internal language—electrical, mechanical, and chemical messages that allow us to understand and interface with our physiology. Among these, electrical biosignals are particularly important for understanding how our nervous system, muscles, and heart function in real time. In this article, we’ll take a closer look at three of the most widely used electrical biosignals—EEG, ECG, and EMG—and their growing role in neurotechnology, diagnostics, performance tracking, and human-computer interaction. If you're new to the concept of biosignals, you might want to check out our introductory blog for a foundational overview.

"The body is a machine, and we must understand its currents if we are to understand its functions."-Émil du Bois-Reymond, pioneer in electrophysiology.

Life, though rare in the universe, leaves behind unmistakable footprints—biosignals. These signals not only confirm the presence of life but also narrate what a living being is doing, feeling, or thinking. As technology advances, we are learning to listen to these whispers of biology. Whether it’s improving health, enhancing performance, or building Brain-Computer Interfaces (BCIs), understanding biosignals is key.

Among the most studied biosignals are:

- Electroencephalogram (EEG) – from the brain

- Electrocardiogram (ECG) – from the heart

- Electromyogram (EMG) – from muscles

- Galvanic Skin Response (GSR) – from skin conductance

These signals are foundational for biosignal processing, real-time monitoring, and interfacing the human body with machines. In this article we look at some of these biosignals and some fascinating stories behind them.

Electroencephalography (EEG): Listening to Brainwaves

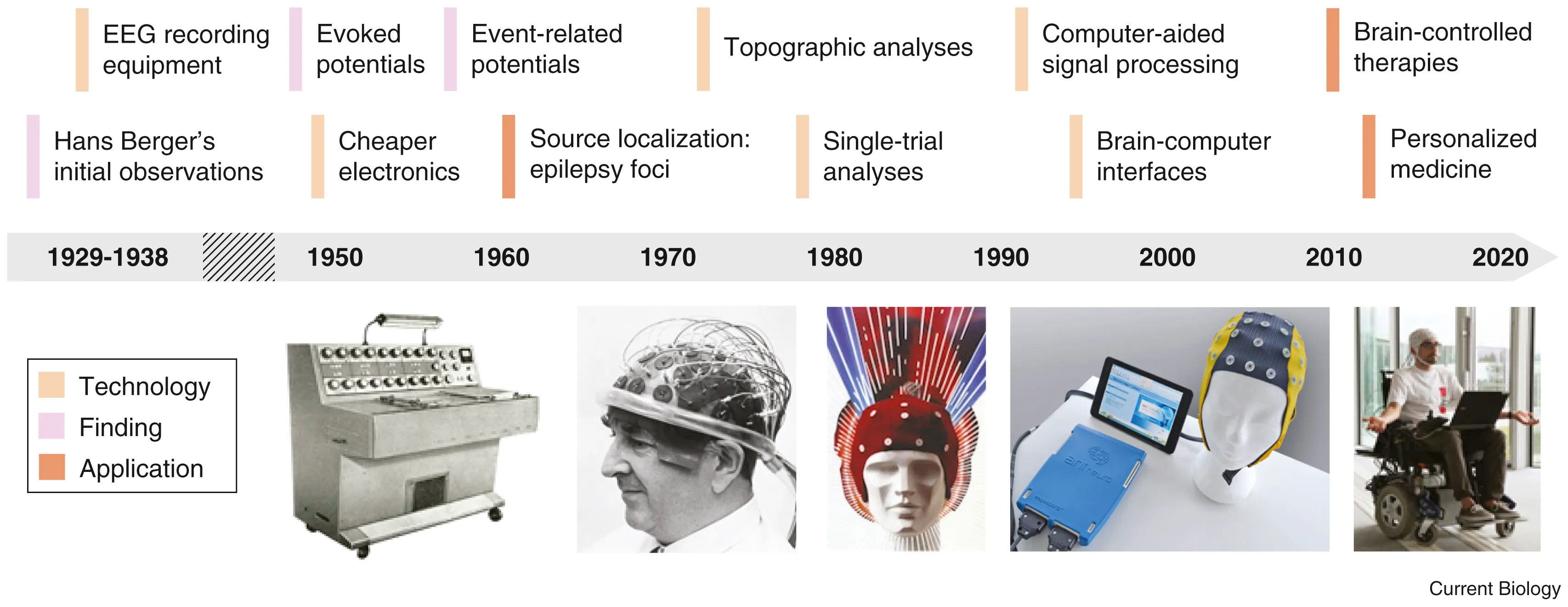

In 1893, a 19-year-old Hans Berger fell from a horse and had a near-death experience. Little did he know that it would be a pivotal moment in the history of neurotechnology. The same day he received a telegram prompted by his sister, who was extremely concerned for him because she had a bad feeling. Berger was convinced that this was due to telepathy. After all, it was the age of radio waves, so why couldn’t there be “brain waves”? Over his ensuing 30-year career he never established telepathy, but in its pursuit Berger became the first person to record human brain waves.

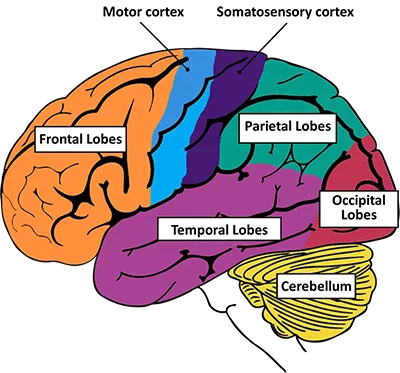

When neurons fire together, they generate tiny electrical currents. These can be recorded using electrodes placed on the scalp (EEG), inside the skull (intracranial EEG), or directly on the surface of the brain (electrocorticography, ECoG). EEG signal processing is used not only to understand the brain’s rhythms but also in EEG-based BCI systems, allowing communication and control for people with paralysis. Event-Related Potentials (ERPs), obtained by averaging EEG segments time-locked to specific stimuli, show how the brain responds to those stimuli, while Local Field Potentials (LFPs) are a related signal recorded by electrodes implanted in brain tissue.
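The averaging idea behind ERPs can be sketched in a few lines: cut a window around every stimulus, subtract each window's pre-stimulus baseline, and average so that activity unrelated to the stimulus cancels out. The function name, window limits, and synthetic recording below are assumptions for illustration, and the sketch assumes `tmin` is negative so a pre-stimulus interval exists.

```python
import numpy as np

def average_erp(signal, event_samples, fs, tmin=-0.2, tmax=0.8):
    """Cut epochs around each event, baseline-correct them, and average."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    n_base = -start  # number of pre-stimulus samples (requires tmin < 0)
    epochs = []
    for ev in event_samples:
        if ev + start < 0 or ev + stop > len(signal):
            continue  # skip events too close to the recording edges
        epoch = signal[ev + start: ev + stop].astype(float)
        epoch -= epoch[:n_base].mean()  # subtract the pre-stimulus mean
        epochs.append(epoch)
    return np.mean(epochs, axis=0)

# Synthetic recording: unit-variance noise plus a small response 0.3 s after each event.
fs = 250
rng = np.random.default_rng(0)
signal = rng.standard_normal(110 * fs)
events = np.arange(1, 101) * fs
t = np.arange(200) / fs
bump = 2.0 * np.exp(-0.5 * ((t - 0.3) / 0.05) ** 2)
for ev in events:
    signal[ev:ev + 200] += bump
erp = average_erp(signal, events, fs)
peak_latency_s = (np.argmax(erp) + int(round(-0.2 * fs))) / fs
```

Averaging N epochs shrinks uncorrelated noise by roughly the square root of N, which is why a response that is hard to see in a single trial stands out clearly in the average.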

Electrocardiogram (ECG): The Rhythm of the Heart

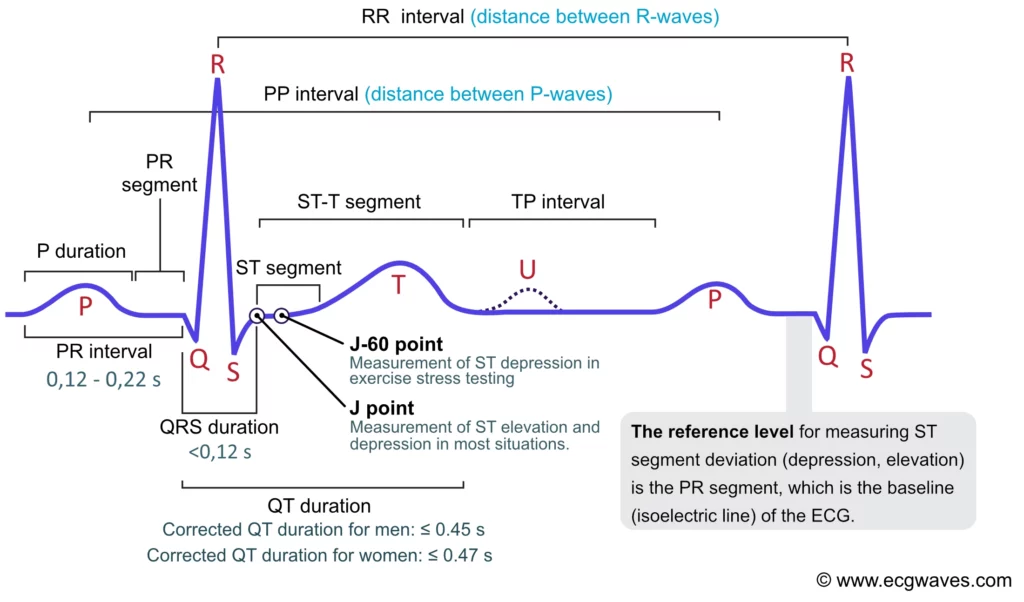

The heart has its own internal clock which produces tiny electrical signals every time it beats. Each heartbeat starts with a small electrical impulse made by a special part of the heart called the sinoatrial (SA) node. This impulse spreads through the heart muscle and makes it contract, first the upper (atria) and then lower chambers (ventricles) – that’s what pumps blood. This process produces voltage changes, which can be recorded via electrodes on the skin.

This gives rise to the classic PQRST waveform, with each component representing a specific part of the heart’s cycle. Modern wearables and medical devices use ECG signal analysis to monitor heart health in real time.
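A simple way to see ECG analysis in action is to find the R peaks (the tall spike in each PQRST complex) and turn the spacing between them into a heart rate. The sketch below uses SciPy's `find_peaks` on a synthetic trace; the threshold of 60% of the maximum, the 0.3 s refractory distance, and the toy waveform are assumptions, and clinical-grade detectors are considerably more elaborate.

```python
import numpy as np
from scipy.signal import find_peaks

def heart_rate_bpm(ecg, fs):
    """Estimate mean heart rate from R-peak spacing in a clean 1-D ECG trace."""
    # R peaks: tall deflections at least 0.3 s apart (caps detection at 200 bpm).
    peaks, _ = find_peaks(ecg, height=0.6 * np.max(ecg), distance=int(0.3 * fs))
    rr_seconds = np.diff(peaks) / fs
    return 60.0 / rr_seconds.mean(), peaks

# Synthetic trace: one narrow R spike and a smaller T wave every 0.8 s (75 bpm).
fs = 250
t = np.arange(0, 10, 1 / fs)
beat_times = 0.4 + 0.8 * np.arange(12)
rng = np.random.default_rng(0)
ecg = 0.02 * rng.standard_normal(t.size)
for bt in beat_times:
    ecg += np.exp(-0.5 * ((t - bt) / 0.01) ** 2)                 # R wave
    ecg += 0.3 * np.exp(-0.5 * ((t - bt - 0.25) / 0.04) ** 2)    # T wave
bpm, r_peaks = heart_rate_bpm(ecg, fs)
```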

Fun fact: The waveform starts with “P” rather than “A”. One popular explanation is that Willem Einthoven left room for earlier letters, just in case future scientists discovered waves that come before P! So, thanks to a cautious scientist, we have the quirky naming system we still follow today.

Electromyography (EMG): The Language of Movement

When we perform any kind of movement (lifting an arm, kicking a leg, smiling, blinking, or even breathing), our brain sends electrical signals to our muscles telling them to contract. When the neurons that carry these commands, known as motor neurons, fire, they send electrical impulses to the muscle fibers they control, causing them to contract. The summed electrical response of those fibers is called a motor unit action potential (MUAP), and the EMG signal we record is the superposition of many MUAPs. So, every time we move, we are generating an EMG signal!

Medical Applications

Medically, EMG is used for monitoring muscle fatigue especially in rehabilitation settings and muscle recovery post-injury or surgery. This helps clinicians measure progress and optimize therapy. EMG can distinguish between voluntary and involuntary movements, making it useful in diagnosing neuromuscular disorders, assessing stroke recovery, spinal cord injuries, and motor control dysfunctions.

Performance and Sports Science

In sports science, EMG can reveal muscle-activation timing and help estimate the force output of muscle groups. These are important factors for measuring performance improvement in any sport. The number of motor units recruited and the synergy between muscle groups help us capture the “mind-muscle connection” and muscle memory. Things that were previously spoken of only figuratively can now be measured and quantified using EMG. By tracking these parameters we get a window into movement efficiency and athletic performance. EMG is also used for biofeedback training, enabling individuals to consciously correct poor movement habits or retrain specific muscles.

Beyond medicine and sports, EMG is used for gesture recognition in AR/VR and gaming, silent speech detection via facial EMG, and next-gen prosthetics and wearable exosuits that respond to the user’s muscle signals. EMG can be used in brain-computer interfaces (BCIs), helping paralyzed individuals control digital devices or communicate through subtle muscle activity. EMG bridges the gap between physiology, behavior, and technology—making it a critical tool in healthcare, performance optimization, and human-machine interaction.

As biosignal processing becomes more refined and neurotech devices more accessible, we are moving toward a world where our body speaks—and machines understand. Whether it’s detecting the subtlest brainwaves, tracking a racing heart, or interpreting muscle commands, biosignals are becoming the foundation of the next digital revolution. One where technology doesn’t just respond, but understands.

The human body is constantly generating data (electrical impulses, chemical fluctuations, and mechanical movements) that provides deep insights into our bodily functions and cognitive states. These measurable physiological signals, known as biosignals, serve as the body's natural language, allowing us to interpret and interact with its inner workings. From monitoring brain activity to assessing muscle movement, biosignals are fundamental to understanding human physiology and expanding the frontiers of human-machine interaction. But what exactly are biosignals? How are they classified, and why do they matter? In this blog, we will explore the different types of biosignals, the science behind their measurement, and the role they play in shaping the future of human health and technology.

What are Biosignals?

Biosignals refer to any measurable signal originating from a biological system. These signals are captured and analyzed to provide meaningful information about the body's functions. Traditionally used in medicine for diagnosis and monitoring, biosignals are now at the forefront of research in neurotechnology, wearable health devices, and human augmentation.

The Evolution of Biosignal Analysis

For centuries, physicians have relied on pulse measurements to assess a person’s health. In ancient Chinese and Ayurvedic medicine, the rhythm, strength, and quality of the pulse were considered indicators of overall well-being. These early methods, while rudimentary, laid the foundation for modern biosignal monitoring.

Today, advancements in sensor technology, artificial intelligence, and data analytics have transformed biosignal analysis. Wearable devices can continuously track heart rate, brain activity, and oxygen levels with high precision. AI-driven algorithms can detect abnormalities in EEG or ECG signals, helping diagnose neurological and cardiac conditions faster than ever. Real-time biosignal monitoring is now integrated into medical, fitness, and neurotechnology applications, unlocking insights that were once beyond our reach.

This leap from manual pulse assessments to AI-powered biosensing is reshaping how we understand and interact with our own biology.

Types of Biosignals

Biosignals come in three main types:

- Electrical Signals: Electrical signals are generated by neural and muscular activity, forming the foundation of many biosignal applications. Electroencephalography (EEG) captures brain activity, playing a crucial role in understanding cognition and diagnosing neurological disorders. Electromyography (EMG) measures muscle activity, aiding in rehabilitation and prosthetic control. Electrocardiography (ECG) records heart activity, making it indispensable for cardiovascular monitoring. Electrooculography (EOG) tracks eye movements, often used in vision research and fatigue detection.

- Mechanical Signals: Mechanical signals arise from bodily movements and structural changes, providing valuable physiological insights. Respiration rate tracks breathing patterns, essential for sleep studies and respiratory health. Blood pressure serves as a key indicator of cardiovascular health and stress responses. Muscle contractions help in analyzing movement disorders and biomechanics, enabling advancements in fields like sports science and physical therapy.

- Chemical Signals: Chemical signals reflect the biochemical activity within the body, offering a deeper understanding of physiological states. Neurotransmitters like dopamine and serotonin play a critical role in mood regulation and cognitive function. Hormone levels serve as indicators of stress, metabolism, and endocrine health. Blood oxygen levels are vital for assessing lung function and metabolic efficiency, frequently monitored in medical and athletic settings.

How Are Biosignals Measured?

After understanding what biosignals are and their different types, the next step is to explore how these signals are captured and analyzed. Measuring biosignals requires specialized sensors that detect physiological activity and convert it into interpretable data. This process involves signal acquisition, processing, and interpretation, enabling real-time monitoring and long-term health assessments.

- Electrodes & Wearable Sensors

Electrodes measure electrical biosignals like EEG (brain activity), ECG (heart activity), and EMG (muscle movement) by detecting small voltage changes. Wearable sensors, such as smartwatches, integrate these electrodes for continuous, non-invasive monitoring, making real-time health tracking widely accessible.

- Optical Sensors

Optical sensors, like pulse oximeters, use light absorption to measure blood oxygen levels (SpO₂) and assess cardiovascular and respiratory function. They are widely used in fitness tracking, sleep studies, and medical diagnostics.

- Pressure Sensors

These sensors measure mechanical biosignals such as blood pressure, respiratory rate, and muscle contractions by detecting force or air pressure changes. Blood pressure cuffs and smart textiles with micro-pressure sensors provide valuable real-time health data.

- Biochemical Assays

Biochemical sensors detect chemical biosignals like hormones, neurotransmitters, and metabolic markers. Advanced non-invasive biosensors can now analyze sweat composition, hydration levels, and electrolyte imbalances without requiring a blood sample.

- Advanced AI & Machine Learning in Biosignal Analysis

Artificial intelligence (AI) and machine learning (ML) have transformed biosignal interpretation by enhancing accuracy and efficiency. These technologies can detect abnormalities in EEG, ECG, and EMG signals, helping with early disease diagnosis. They also filter out noise and artifacts, improving signal clarity for more precise analysis. By analyzing long-term biosignal trends, AI can predict potential health risks and enable proactive interventions. Additionally, real-time AI-driven feedback is revolutionizing applications like neurofeedback and biofeedback therapy, allowing for more personalized and adaptive healthcare solutions. The integration of AI with biosignal measurement is paving the way for smarter diagnostics, personalized medicine, and enhanced human performance tracking.

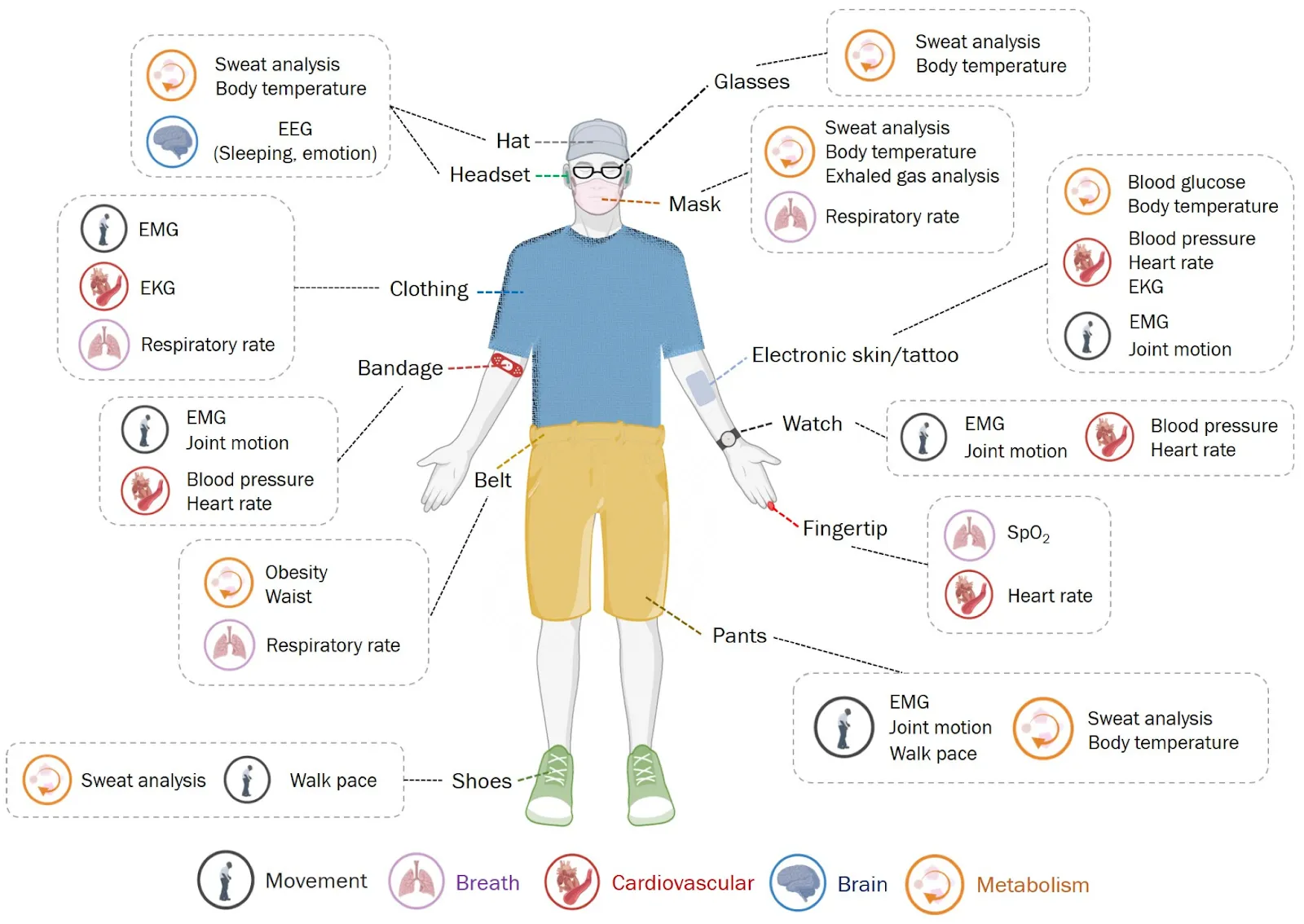

Figure: The image provides an overview of biosignals detectable from different parts of the human body and their corresponding wearable sensors. It categorizes biosignals such as EEG, ECG, and EMG, demonstrating how wearable technologies enable real-time health monitoring and improve diagnostic capabilities.

The Future of Biosignals

As sensor technology and artificial intelligence continue to evolve, biosignals will become even more integrated into daily life, shifting from reactive healthcare to proactive and predictive wellness solutions. Advances in non-invasive monitoring will allow for continuous tracking of vital biomarkers, reducing the need for clinical testing. Wearable biosensors will provide real-time insights into hydration, stress, and metabolic health, enabling individuals to make data-driven decisions about their well-being. Artificial intelligence will play a pivotal role in analyzing complex biosignal patterns, enabling early detection of diseases before symptoms arise and personalizing treatments based on an individual's physiological data.

The intersection of biosignals and brain-computer interfaces (BCIs) is also pushing the boundaries of human-machine interaction. EEG-based BCIs are already enabling users to control digital interfaces with their thoughts, and future developments could lead to seamless integration between the brain and external devices. Beyond healthcare, biosignals will drive innovations in adaptive learning, biometric authentication, and even entertainment, where music, lighting, and virtual experiences could respond to real-time physiological states. As these technologies advance, biosignals will not only help us understand the body better but also enhance human capabilities, bridging the gap between biology and technology in unprecedented ways.

Welcome back to our BCI crash course! Over the past eight blogs, we have explored the fascinating intersection of neuroscience, engineering, and machine learning, from the fundamental concepts of BCIs to the practical implementation of real-world applications. In this final installment, we will shift our focus to the future of BCI, delving into advanced topics and research directions that are pushing the boundaries of mind-controlled technology. Get ready to explore the exciting possibilities of hybrid BCIs, adaptive algorithms, ethical considerations, and the transformative potential that lies ahead for this groundbreaking field.

Hybrid BCIs: Combining Paradigms for Enhanced Performance

As we've explored in previous posts, different BCI paradigms leverage distinct brain signals and have their strengths and limitations. Motor imagery BCIs excel at decoding movement intentions, P300 spellers enable communication through attention-based selections, and SSVEP BCIs offer high-speed control using visual stimuli.

What are Hybrid BCIs? Synergy of Brain Signals

Hybrid BCIs combine multiple BCI paradigms, integrating different brain signals to create more robust, versatile, and user-friendly systems. Imagine a BCI that leverages both motor imagery and SSVEP to control a robotic arm with greater precision and flexibility, or a system that combines P300 with error-related potentials (ErrPs) to improve the accuracy and speed of a speller.

Benefits of Hybrid BCIs: Unlocking New Possibilities

Hybrid BCIs offer several advantages over single-paradigm systems:

- Improved Accuracy and Reliability: Combining complementary brain signals can enhance the signal-to-noise ratio and reduce the impact of individual variations in brain activity, leading to more accurate and reliable BCI control.

- Increased Flexibility and Adaptability: Hybrid BCIs can adapt to different user needs, tasks, and environments by dynamically switching between paradigms or combining them in a way that optimizes performance.

- Richer and More Natural Interactions: Integrating multiple BCI paradigms opens up possibilities for creating more intuitive and natural BCI interactions, allowing users to control devices with a greater range of mental commands.

Examples of Hybrid BCIs: Innovations in Action

Research is exploring various hybrid BCI approaches:

- Motor Imagery + SSVEP: Combining motor imagery with SSVEP can enhance the control of robotic arms. Motor imagery provides continuous control signals for movement direction, while SSVEP enables discrete selections for grasping or releasing objects.

- P300 + ErrP: Integrating P300 with ErrPs, brain signals that occur when we make errors, can improve speller accuracy. The P300 is used to select letters, while ErrPs can be used to automatically correct errors, reducing the need for manual backspacing.

Adaptive BCIs: Learning and Evolving with the User

One of the biggest challenges in BCI development is the inherent variability in brain signals. A BCI system that works perfectly for one user might perform poorly for another, and even a single user's brain activity can change over time due to factors like learning, fatigue, or changes in attention. This is where adaptive BCIs come into play, offering a dynamic and personalized approach to brain-computer interaction.

The Need for Adaptation: Embracing the Brain's Dynamic Nature

BCI systems need to adapt to several factors:

- Changes in User Brain Activity: Brain signals are not static. They evolve as users learn to control the BCI, become fatigued, or shift their attention. An adaptive BCI can track these changes and adjust its processing accordingly.

- Variations in Signal Quality and Noise: EEG recordings can be affected by various sources of noise, from muscle artifacts to environmental interference. An adaptive BCI can adjust its filtering and artifact rejection parameters to maintain optimal signal quality.

- Different User Preferences and Skill Levels: BCI users have different preferences for control strategies, feedback modalities, and interaction speeds. An adaptive BCI can personalize its settings to match each user's individual needs and skill level.

Methods for Adaptation: Tailoring BCIs to the Individual

Various techniques can be employed to create adaptive BCIs:

- Machine Learning Adaptation: Machine learning algorithms, such as those used for classification, can be trained to continuously learn and update the BCI model based on the user's brain data. This allows the BCI to adapt to changes in brain patterns over time and improve its accuracy and responsiveness.

- User Feedback Adaptation: BCIs can incorporate user feedback, either explicitly (through direct input) or implicitly (by monitoring performance and user behavior), to adjust parameters and optimize the interaction. For example, if a user consistently struggles to control a motor imagery BCI, the system could adjust the classification thresholds or provide more frequent feedback to assist them.
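One of the simplest forms of machine learning adaptation is to let the classifier's class prototypes drift along with the user's signals. The sketch below uses a nearest-mean classifier whose class means are updated with an exponential moving average whenever a labeled (or feedback-confirmed) trial arrives. The class name, the update rate, and the toy feature values are assumptions for illustration, not a method described in this series.

```python
import numpy as np

class AdaptiveNearestMean:
    """Nearest-mean classifier whose class means track slow signal drift."""

    def __init__(self, mean0, mean1, rate=0.1):
        self.means = np.array([mean0, mean1], dtype=float)
        self.rate = rate  # fraction of the gap closed per update (assumed value)

    def predict(self, x):
        distances = np.linalg.norm(self.means - np.asarray(x, dtype=float), axis=1)
        return int(np.argmin(distances))

    def update(self, x, label):
        # Exponential moving average: move the class mean a step toward x.
        self.means[label] += self.rate * (np.asarray(x, dtype=float) - self.means[label])

clf = AdaptiveNearestMean(mean0=[0.0, 0.0], mean1=[2.0, 0.0], rate=0.1)
probe = np.array([1.2, 0.0])
before = clf.predict(probe)      # the stale class-1 mean is closer, so this is 1
for _ in range(50):              # class-0 activity has drifted to around [1, 0]
    clf.update([1.0, 0.0], label=0)
after = clf.predict(probe)       # the class-0 mean has followed the drift, so this is 0
```

A small update rate makes the model stable but slow to follow changes, while a large rate reacts quickly but can chase noise; choosing it is part of tuning an adaptive BCI.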

Benefits of Adaptive BCIs: A Personalized and Evolving Experience

Adaptive BCIs offer significant advantages:

- Enhanced Usability and User Experience: By adapting to individual needs and preferences, adaptive BCIs can become more intuitive and easier to use, reducing user frustration and improving the overall experience.

- Improved Long-Term Performance and Reliability: Adaptive BCIs can maintain high levels of performance and reliability over time by adjusting to changes in brain activity and signal quality.

- Personalized BCIs: Adaptive algorithms can tailor the BCI to each user's unique brain patterns, preferences, and abilities, creating a truly personalized experience.

Ethical Considerations: Navigating the Responsible Development of BCI

As BCI technology advances, it's crucial to consider the ethical implications of its development and use. BCIs have the potential to profoundly impact individuals and society, raising questions about privacy, autonomy, fairness, and responsibility.

Introduction: Ethics at the Forefront of BCI Innovation

Ethical considerations should be woven into the fabric of BCI research and development, guiding our decisions and ensuring that this powerful technology is used for good.

Key Ethical Concerns: Navigating a Complex Landscape

- Privacy and Data Security: BCIs collect sensitive brain data, raising concerns about privacy violations and potential misuse. Robust data security measures and clear ethical guidelines are crucial for protecting user privacy and ensuring responsible data handling.

- Agency and Autonomy: BCIs have the potential to influence user thoughts, emotions, and actions. It's essential to ensure that BCI use respects user autonomy and agency, avoiding coercion, manipulation, or unintended consequences.

- Bias and Fairness: BCI algorithms can inherit biases from the data they are trained on, potentially leading to unfair or discriminatory outcomes. Addressing these biases and developing fair and equitable BCI systems is essential for responsible innovation.

- Safety and Responsibility: As BCIs become more sophisticated and integrated into critical applications like healthcare and transportation, ensuring their safety and reliability is paramount. Clear lines of responsibility and accountability need to be established to mitigate potential risks and ensure ethical use.

Guidelines and Principles: A Framework for Responsible BCI

Efforts are underway to establish ethical guidelines and principles for BCI research and development. These guidelines aim to promote responsible innovation, protect user rights, and ensure that BCI technology benefits society as a whole.

Current Challenges and Future Prospects: The Road Ahead for BCI

While BCI technology has made remarkable progress, several challenges remain to be addressed before it can fully realize its transformative potential. However, the future of BCI is bright, with exciting possibilities on the horizon for enhancing human capabilities, restoring lost function, and improving lives.

Technical Challenges: Overcoming Roadblocks to Progress

- Signal Quality and Noise: Non-invasive BCIs, particularly those based on EEG, often suffer from low signal-to-noise ratios. Improving signal quality through advanced electrode designs, noise reduction algorithms, and a better understanding of brain signals is crucial for enhancing BCI accuracy and reliability.

- Robustness and Generalizability: Current BCI systems often work well in controlled laboratory settings but struggle to perform consistently across different users, environments, and tasks. Developing more robust and generalizable BCIs is essential for wider adoption and real-world applications.

- Long-Term Stability: Maintaining the long-term stability and performance of BCI systems, especially for implanted devices, is a significant challenge. Addressing issues like biocompatibility, signal degradation, and device longevity is crucial for ensuring the viability of invasive BCIs.

Future Directions: Expanding the BCI Horizon

- Non-invasive Advancements: Research is focusing on developing more sophisticated and user-friendly non-invasive BCI systems. Advancements in EEG technology, including dry electrodes, high-density arrays, and mobile brain imaging, hold promise for creating more portable, comfortable, and accurate non-invasive BCIs.

- Clinical Applications: BCIs are showing increasing promise for clinical applications, such as restoring lost motor function in individuals with paralysis, assisting in stroke rehabilitation, and treating neurological disorders like epilepsy and Parkinson's disease. Ongoing research and clinical trials are paving the way for wider adoption of BCIs in healthcare.

- Cognitive Enhancement: BCIs have the potential to enhance cognitive abilities, such as memory, attention, and learning. Research is exploring ways to use BCIs for cognitive training and to develop brain-computer interfaces that can augment human cognitive function.

- Brain-to-Brain Communication: One of the most futuristic and intriguing directions in BCI research is the possibility of direct brain-to-brain communication. Studies have already demonstrated the feasibility of transmitting simple signals between brains, opening up possibilities for collaborative problem-solving, enhanced empathy, and new forms of communication.

Resources for Further Learning and Development

- Brain-Computer Interface Wiki

- Research Journals and Conferences:

- Journal of Neural Engineering: https://iopscience.iop.org/journal/1741-2560 - A leading journal for BCI research and related fields.

- Brain-Computer Interfaces: https://www.tandfonline.com/toc/tbci20/current - A dedicated journal focusing on advances in BCI technology and applications.

Embracing the Transformative Power of BCI

From hybrid systems to adaptive algorithms, ethical considerations, and the exciting possibilities of the future, we've explored the cutting edge of BCI technology. This field is rapidly evolving, driven by advancements in neuroscience, engineering, and machine learning.

BCIs hold immense potential to revolutionize how we interact with technology, enhance human capabilities, restore lost function, and improve lives. As we continue to push the boundaries of mind-controlled technology, the future promises a world where our thoughts can seamlessly translate into actions, unlocking new possibilities for communication, control, and human potential.

As we wrap up this course with this final blog article, we hope that you gained an overview as well as practical expertise in the field of BCIs. Please feel free to reach out to us with feedback and areas of improvement. Thank you for reading along so far, and best wishes for further endeavors in your BCI journey!

Welcome back to our BCI crash course! We've journeyed from the fundamental concepts of BCIs to the intricacies of brain signals, mastered the art of signal processing, and learned how to train intelligent algorithms to decode those signals. Now, we're ready to tackle a fascinating and powerful BCI paradigm: motor imagery. Motor imagery BCIs allow users to control devices simply by imagining movements. This technology holds immense potential for applications like controlling neuroprosthetics for individuals with paralysis, assisting in stroke rehabilitation, and even creating immersive gaming experiences. In this post, we'll guide you through the step-by-step process of building a basic motor imagery BCI using Python, MNE-Python, and scikit-learn. Get ready to harness the power of your thoughts to interact with technology!

Understanding Motor Imagery: The Brain's Internal Rehearsal

Before we dive into building our BCI, let's first understand the fascinating phenomenon of motor imagery.

What is Motor Imagery? Moving Without Moving

Motor imagery is the mental rehearsal of a movement without actually performing the physical action. It's like playing a video of the movement in your mind's eye, engaging the same neural processes involved in actual execution but without sending the final commands to your muscles.

Neural Basis of Motor Imagery: The Brain's Shared Representations

Remarkably, motor imagery activates similar brain regions and neural networks as actual movement. The motor cortex, the area of the brain responsible for planning and executing movements, is particularly active during motor imagery. This shared neural representation suggests that imagining a movement is a powerful way to engage the brain's motor system, even without physical action.

EEG Correlates of Motor Imagery: Decoding Imagined Movements

Motor imagery produces characteristic changes in EEG signals, particularly over the motor cortex. Two key features are:

- Event-Related Desynchronization (ERD): A decrease in power in specific frequency bands (mu, 8-12 Hz, and beta, 13-30 Hz) over the motor cortex during motor imagery. This decrease reflects the activation of neural populations involved in planning and executing the imagined movement.

- Event-Related Synchronization (ERS): An increase in power in those frequency bands after the termination of motor imagery, as the brain returns to its resting state.

These EEG features provide the foundation for decoding motor imagery and building BCIs that can translate imagined movements into control signals.
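ERD and ERS are commonly expressed as a percentage change in band power relative to a reference (rest) interval. The sketch below shows that calculation on a synthetic signal; the function name `erd_percent`, the 250 Hz sampling rate, and the sine-wave "mu rhythm" are assumptions made for illustration.

```python
import numpy as np


def erd_percent(baseline, task):
    """Relative band-power change (%) of a band-pass filtered signal.

    Negative values indicate ERD (power drop), positive values ERS (power rise).
    """
    baseline_power = np.mean(np.square(baseline))
    task_power = np.mean(np.square(task))
    return 100.0 * (task_power - baseline_power) / baseline_power


fs = 250                      # assumed sampling rate in Hz
t = np.arange(fs) / fs        # one second of samples
mu_rest = 2.0 * np.sin(2 * np.pi * 10 * t)     # strong 10 Hz mu rhythm at rest
mu_imagery = 1.0 * np.sin(2 * np.pi * 10 * t)  # attenuated during imagery

erd = erd_percent(mu_rest, mu_imagery)  # amplitude halves, so power drops by 75%
```

With real EEG, the two inputs would be the band-pass filtered signal from a pre-cue interval and from the imagery interval, usually averaged over many trials.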

Building a Motor Imagery BCI: A Step-by-Step Guide

Now that we understand the neural basis of motor imagery, let's roll up our sleeves and build a BCI that can decode these imagined movements. We'll follow a step-by-step process, using Python, MNE-Python, and scikit-learn to guide us.

1. Loading the Dataset

Choosing the Dataset: BCI Competition IV Dataset 2a

For this project, we'll use the BCI Competition IV dataset 2a, a publicly available EEG dataset specifically designed for motor imagery BCI research. This dataset offers several advantages:

- Standardized Paradigm: The dataset follows a well-defined experimental protocol, making it easy to understand and replicate. Participants were cued to imagine one of four movements (left hand, right hand, both feet, or tongue); in this post we use only the left-hand and right-hand trials, which gives clear labels for a two-class classification task.

- Multiple Subjects: It includes recordings from nine subjects, providing a decent sample size to train and evaluate our BCI model.

- Widely Used: This dataset has been extensively used in BCI research, allowing us to compare our results with established benchmarks and explore various analysis approaches.

You can download the dataset from the BCI Competition IV website (http://www.bbci.de/competition/iv/).

Loading the Data: MNE-Python to the Rescue

Once you have the dataset downloaded, you can load it using MNE-Python's convenient functions. Here's a code snippet to get you started:

import mne

# Set the path to the dataset directory

data_path = '<path_to_dataset_directory>'

# Load the raw EEG data for subject 1

raw = mne.io.read_raw_gdf(data_path + '/A01T.gdf', preload=True)

Replace <path_to_dataset_directory> with the actual path to the directory where you've stored the dataset files. This code loads the data for subject "A01" from the training session ("T").

2. Data Preprocessing: Preparing the Signals for Decoding

Raw EEG data is often noisy and contains artifacts that can interfere with our analysis. Preprocessing is crucial for cleaning up the data and isolating the relevant brain signals associated with motor imagery.

Channel Selection: Focusing on the Motor Cortex

Since motor imagery primarily activates the motor cortex, we'll select EEG channels that capture activity from this region. Key channels include:

- C3: Located over the left motor cortex, sensitive to right-hand motor imagery.

- C4: Located over the right motor cortex, sensitive to left-hand motor imagery.

- Cz: Located over the midline, often used as a reference or to capture general motor activity.

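# Select the desired channels (names must match raw.ch_names; in the dataset's GDF files they may carry a prefix, e.g. 'EEG-C3')

channels = ['C3', 'C4', 'Cz']

# Create a new raw object with only the selected channels (copy first, because pick() modifies the object in place)

raw_selected = raw.copy().pick(channels)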

Filtering: Isolating Mu and Beta Rhythms

We'll apply a band-pass filter to isolate the mu (8-12 Hz) and beta (13-30 Hz) frequency bands, as these rhythms exhibit the most prominent ERD/ERS patterns during motor imagery.

# Apply a band-pass filter from 8 Hz to 30 Hz

raw_filtered = raw_selected.filter(l_freq=8, h_freq=30)

This filtering step removes irrelevant frequencies and enhances the signal-to-noise ratio for detecting motor imagery-related brain activity.
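To see what an 8-30 Hz band-pass does, the sketch below applies an idealised FFT-based filter to a synthetic mixture. This is not how MNE-Python filters data (its `filter()` method designs a proper FIR filter by default); the helper `fft_bandpass` and the signal components are assumptions chosen so the effect is easy to verify.

```python
import numpy as np


def fft_bandpass(signal, fs, low, high):
    """Zero every frequency bin outside [low, high] Hz (idealised brick-wall filter)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[(freqs < low) | (freqs > high)] = 0
    return np.fft.irfft(spectrum, n=len(signal))


fs = 250                                  # assumed sampling rate in Hz
t = np.arange(2 * fs) / fs                # two seconds of samples
drift = 3.0 * np.sin(2 * np.pi * 2 * t)   # slow 2 Hz drift
mu = 1.0 * np.sin(2 * np.pi * 10 * t)     # 10 Hz mu rhythm
mains = 0.5 * np.sin(2 * np.pi * 50 * t)  # 50 Hz mains hum

filtered = fft_bandpass(drift + mu + mains, fs, low=8, high=30)
```

Only the 10 Hz component survives. Brick-wall filters like this one cause ringing on real, non-periodic data, which is why practical pipelines use smoother filter designs.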

Artifact Removal: Enhancing Data Quality (Optional)

Depending on the dataset and the quality of the recordings, we might need to apply artifact removal techniques. Independent Component Analysis (ICA) is particularly useful for identifying and removing artifacts like eye blinks, muscle activity, and heartbeats, which can contaminate our motor imagery signals. MNE-Python provides functions for performing ICA and visualizing the components, allowing us to select and remove those associated with artifacts. This step can significantly improve the accuracy and reliability of our motor imagery BCI.

3. Epoching and Visualizing: Zooming in on Motor Imagery

Now that we've preprocessed our EEG data, let's create epochs around the motor imagery cues, allowing us to focus on the brain activity specifically related to those imagined movements.

Defining Epochs: Capturing the Mental Rehearsal

The BCI Competition IV dataset 2a includes event markers indicating the onset of the motor imagery cues. We'll use these markers to create epochs, typically spanning a time window from a second before the cue to several seconds after it. This window captures the ERD and ERS patterns associated with motor imagery.

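# Convert the annotations stored in the GDF file into an events array

events, annotation_ids = mne.events_from_annotations(raw_filtered)

# Map left and right hand cues to their event codes. In dataset 2a the cue annotations are expected to be '769' (left hand) and '770' (right hand); check annotation_ids and the dataset documentation

event_id = {'left_hand': annotation_ids['769'], 'right_hand': annotation_ids['770']}

# Set the epoch time window

tmin = -1 # 1 second before the cue

tmax = 4 # 4 seconds after the cue

# Create epochs

epochs = mne.Epochs(raw_filtered, events, event_id, tmin, tmax, baseline=(-1, 0), preload=True)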

Baseline Correction: Removing Pre-Imagery Bias

We'll apply baseline correction to remove any pre-existing bias in the EEG signal, ensuring that our analysis focuses on the changes specifically related to motor imagery.
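Conceptually, epoching is just slicing a continuous array around each cue, and baseline correction is subtracting the pre-cue mean. The NumPy sketch below mirrors those two steps on random data; `make_epochs`, `baseline_correct`, the sampling rate, and the cue positions are hypothetical, and `mne.Epochs` handles many details (such as rejection and exact baseline endpoints) that are ignored here.

```python
import numpy as np


def make_epochs(data, event_samples, fs, tmin, tmax):
    """Cut (n_channels, n_samples) data into (n_epochs, n_channels, n_times) epochs."""
    start = int(round(tmin * fs))
    stop = int(round(tmax * fs)) + 1       # include the sample at tmax
    return np.stack([data[:, s + start:s + stop] for s in event_samples])


def baseline_correct(epochs, fs, tmin, baseline_end=0.0):
    """Subtract each channel's mean over [tmin, baseline_end) from every epoch."""
    n_baseline = int(round((baseline_end - tmin) * fs))
    baseline_mean = epochs[:, :, :n_baseline].mean(axis=2, keepdims=True)
    return epochs - baseline_mean


fs = 250                                    # assumed sampling rate in Hz
rng = np.random.default_rng(0)
data = rng.normal(size=(3, 20 * fs)) + 5.0  # 3 channels, 20 s, constant offset of 5
event_samples = [2 * fs, 8 * fs, 14 * fs]   # hypothetical cue onsets (in samples)

epochs = make_epochs(data, event_samples, fs, tmin=-1.0, tmax=4.0)
epochs_bc = baseline_correct(epochs, fs, tmin=-1.0)
```

After correction, the mean of the one-second pre-cue interval is zero for every epoch and channel, so the constant offset no longer affects later analysis.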

Visualizing: Inspecting and Gaining Insights

- Plotting Epochs: Use epochs.plot() to visualize individual epochs, inspecting for artifacts and observing the general patterns of brain activity during motor imagery.

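- Topographical Maps: Use epochs['left_hand'].average().plot_topomap() and epochs['right_hand'].average().plot_topomap() to visualize the scalp distribution of activity during left and right hand motor imagery. Topographic plots need electrode positions (a montage) and more than a handful of channels, so run them on the full channel set rather than only C3, C4, and Cz. Because ERD is a change in band power rather than a time-locked deflection, maps of mu and beta power show it more directly than a plain average. These maps can help validate our channel selection and confirm that the ERD patterns are localized over the expected motor cortex areas.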

4. Feature Extraction with Common Spatial Patterns (CSP): Maximizing Class Differences

Common Spatial Patterns (CSP) is a spatial filtering technique specifically designed to extract features that best discriminate between two classes of EEG data. In our case, these classes are left-hand and right-hand motor imagery.

Understanding CSP: Finding Optimal Spatial Filters

CSP seeks to find spatial filters that maximize the variance of one class while minimizing the variance of the other. It achieves this by solving an eigenvalue problem based on the covariance matrices of the two classes. The resulting spatial filters project the EEG data onto a new space where the classes are more easily separable.
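The eigenvalue problem described above can be sketched in a few lines of NumPy. This is a bare-bones illustration on synthetic data, not MNE-Python's implementation: the function `csp_filters`, the three-channel layout, and the variance scales are assumptions, and the covariance estimate assumes zero-mean (band-pass filtered) signals.

```python
import numpy as np


def csp_filters(epochs_a, epochs_b):
    """Return CSP filters (rows) and eigenvalues for two (n_epochs, n_channels, n_times) arrays."""
    def mean_cov(epochs):
        return np.mean([trial @ trial.T / trial.shape[1] for trial in epochs], axis=0)

    cov_a, cov_b = mean_cov(epochs_a), mean_cov(epochs_b)

    # Whiten the composite covariance so that P @ (cov_a + cov_b) @ P.T = I
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitener = np.diag(evals ** -0.5) @ evecs.T

    # Eigenvectors of the whitened class-A covariance give the CSP directions
    lam, rot = np.linalg.eigh(whitener @ cov_a @ whitener.T)
    order = np.argsort(lam)[::-1]          # largest class-A variance first
    return rot[:, order].T @ whitener, lam[order]


rng = np.random.default_rng(42)
scale_a = np.array([3.0, 1.0, 1.0])[:, None]   # class A: channel 0 is strong
scale_b = np.array([1.0, 1.0, 3.0])[:, None]   # class B: channel 2 is strong
epochs_a = rng.normal(size=(30, 3, 200)) * scale_a
epochs_b = rng.normal(size=(30, 3, 200)) * scale_b

filters, eigenvalues = csp_filters(epochs_a, epochs_b)
```

Each eigenvalue is the share of a component's variance that comes from class A, so values near 1 or near 0 mark the most discriminative filters. That is why CSP features are usually taken from both ends of the eigenvalue spectrum.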

Applying CSP: MNE-Python's CSP Function

MNE-Python's mne.decoding.CSP() function makes it easy to extract CSP features:

from mne.decoding import CSP

# Create a CSP object

csp = CSP(n_components=4, reg=None, log=True, norm_trace=False)

# Get the epochs data (n_epochs, n_channels, n_times) and the class label of each epoch

X = epochs.get_data()

y = epochs.events[:, -1]

# Fit the CSP filters to the data and labels

csp.fit(X, y)

# Transform the epochs data using the CSP filters

X_csp = csp.transform(X)

Interpreting CSP Filters: Mapping Brain Activity

The CSP spatial filters represent patterns of brain activity that differentiate between left and right hand motor imagery. By visualizing these filters, we can gain insights into the underlying neural sources involved in these imagined movements.

Selecting CSP Components: Balancing Performance and Complexity

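The n_components parameter in the CSP() function determines the number of CSP components to extract. Choosing the optimal number of components is crucial for balancing classification performance and model complexity. Too few components might not capture enough information, while too many can lead to overfitting. Cross-validation can help us find the optimal balance. Note that CSP cannot produce more components than there are channels, so with only C3, C4, and Cz at most three components are available; CSP is generally more useful when it is given a larger set of EEG channels.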

5. Classification with a Linear SVM: Decoding Motor Imagery

Choosing the Classifier: Linear SVM for Simplicity and Efficiency

We'll use a linear Support Vector Machine (SVM) to classify our motor imagery data. Linear SVMs are well-suited for this task due to their simplicity, efficiency, and ability to handle high-dimensional data. They seek to find a hyperplane that best separates the two classes in the feature space.

Training the Model: Learning from Spatial Patterns

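from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

# Class label of each epoch

y = epochs.events[:, -1]

# Hold out 20% of the trials for testing

X_csp_train, X_csp_test, y_train, y_test = train_test_split(X_csp, y, test_size=0.2, stratify=y, random_state=42)

# Create a linear SVM classifier

svm = SVC(kernel='linear')

# Train the SVM model

svm.fit(X_csp_train, y_train)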

Hyperparameter Tuning: Optimizing for Peak Performance

SVMs have hyperparameters, like the regularization parameter C, that control the model's complexity and generalization ability. Hyperparameter tuning, using techniques like grid search or cross-validation, helps us find the optimal values for these parameters to maximize classification accuracy.
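A grid search over C might look like the sketch below. It uses scikit-learn's `GridSearchCV` on synthetic features that stand in for CSP output; the data, the grid values, and the names `X_demo` and `y_demo` are assumptions for illustration only.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in for CSP features: two Gaussian clusters, 4 features each
rng = np.random.default_rng(0)
X_demo = np.vstack([rng.normal(-1.0, 1.0, size=(60, 4)),
                    rng.normal(+1.0, 1.0, size=(60, 4))])
y_demo = np.array([0] * 60 + [1] * 60)

# Try several values of C, scoring each with 5-fold cross-validation
param_grid = {'C': [0.01, 0.1, 1, 10, 100]}
search = GridSearchCV(SVC(kernel='linear'), param_grid, cv=5)
search.fit(X_demo, y_demo)

best_C = search.best_params_['C']
best_score = search.best_score_
```

The score reported for the winning value is optimistic, because the same folds were used to choose it. Keeping a separate held-out test set (or using nested cross-validation) gives a fairer final estimate.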

Evaluating the Motor Imagery BCI: Measuring Mind Control

We've built our motor imagery BCI, but how well does it actually work? Evaluating its performance is crucial for understanding its capabilities and limitations, especially if we envision real-world applications.

Cross-Validation: Assessing Generalizability

To obtain a reliable estimate of our BCI's performance, we'll employ k-fold cross-validation. This technique helps us assess how well our model generalizes to unseen data, providing a more realistic measure of its real-world performance.

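from sklearn.model_selection import cross_val_score

from sklearn.pipeline import make_pipeline

# Put CSP and the SVM in one pipeline so the spatial filters are refit on each training fold and never see the test fold

clf = make_pipeline(CSP(n_components=4, reg=None, log=True, norm_trace=False), SVC(kernel='linear'))

# Perform 5-fold cross-validation on the epochs data and their labels

scores = cross_val_score(clf, epochs.get_data(), epochs.events[:, -1], cv=5)

# Print the average accuracy across the folds

print("Average accuracy: %0.2f" % scores.mean())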

Performance Metrics: Beyond Simple Accuracy

- Accuracy: While accuracy, the proportion of correctly classified instances, is a useful starting point, it doesn't tell the whole story. For imbalanced datasets (where one class has significantly more samples than the other), accuracy can be misleading.

- Kappa Coefficient: The Kappa coefficient (κ) measures the agreement between the classifier's predictions and the true labels, taking into account the possibility of chance agreement. A Kappa value of 1 indicates perfect agreement, while 0 indicates agreement equivalent to chance. Kappa is a more robust metric than accuracy, especially for imbalanced datasets.

- Information Transfer Rate (ITR): ITR quantifies the amount of information transmitted by the BCI per unit of time, considering both accuracy and the number of possible choices. A higher ITR indicates a faster and more efficient communication system.

- Sensitivity and Specificity: These metrics provide a more nuanced view of classification performance. Sensitivity measures the proportion of correctly classified positive instances (e.g., correctly identifying left-hand imagery), while specificity measures the proportion of correctly classified negative instances (e.g., correctly identifying right-hand imagery).
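The metrics above can all be derived from a two-by-two confusion matrix. The sketch below does so in plain Python; the function name `binary_metrics` and the trial counts are hypothetical, and left-hand imagery is arbitrarily treated as the positive class.

```python
def binary_metrics(tp, fn, fp, tn):
    """Accuracy, Cohen's kappa, sensitivity and specificity from a 2x2 confusion matrix."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total
    # Chance agreement: product of marginal probabilities, summed over both classes
    p_chance = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / total ** 2
    kappa = (accuracy - p_chance) / (1 - p_chance)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return accuracy, kappa, sensitivity, specificity


# Balanced case: 50 left-hand (positive) and 50 right-hand (negative) trials
accuracy, kappa, sensitivity, specificity = binary_metrics(tp=40, fn=10, fp=10, tn=40)

# Imbalanced case: a classifier that always answers "negative" on a 10/90 split
acc_lazy, kappa_lazy, sens_lazy, spec_lazy = binary_metrics(tp=0, fn=10, fp=0, tn=90)
```

In the balanced case, accuracy is 0.8 and kappa is 0.6. In the imbalanced case the lazy classifier still reaches 0.9 accuracy, but its kappa is 0 and its sensitivity is 0, which is exactly the failure that accuracy alone hides.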

Practical Implications: From Benchmarks to Real-World Use

Evaluating a motor imagery BCI goes beyond just looking at numbers. We need to consider the practical implications of its performance:

- Minimum Accuracy Requirements: Real-world applications often have minimum accuracy thresholds. For example, a neuroprosthetic controlled by a motor imagery BCI might require an accuracy of over 90% to ensure safe and reliable operation.

- User Experience: Beyond accuracy, factors like speed, ease of use, and mental effort also contribute to the overall user experience.

Unlocking the Potential of Motor Imagery BCIs

We've successfully built a basic motor imagery BCI, witnessing the power of EEG, signal processing, and machine learning to decode movement intentions directly from brain signals. Motor imagery BCIs hold immense potential for a wide range of applications, offering new possibilities for individuals with disabilities, stroke rehabilitation, and even immersive gaming experiences.

Resources for Further Reading

- Review article: EEG-Based Brain-Computer Interfaces Using Motor-Imagery: Techniques and Challenges https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6471241/

- Review article: A review of critical challenges in MI-BCI: From conventional to deep learning methods https://www.sciencedirect.com/science/article/abs/pii/S016502702200262X

- BCI Competition IV Dataset 2a https://www.bbci.de/competition/iv/desc_2a.pdf

From Motor Imagery to Advanced BCI Paradigms

This concludes our exploration of building a motor imagery BCI. You've gained valuable insights into the neural basis of motor imagery, learned how to extract features using CSP, trained a classifier to decode movement intentions, and evaluated the performance of your BCI model.

In our final blog post, we'll explore the exciting frontier of advanced BCI paradigms and future directions. We'll delve into concepts like hybrid BCIs, adaptive algorithms, ethical considerations, and the ever-expanding possibilities that lie ahead in the world of brain-computer interfaces. Stay tuned for a glimpse into the future of mind-controlled technology!

Welcome back to our BCI crash course! We've explored the foundations of BCIs, delved into the intricacies of brain signals, mastered the art of signal processing, and learned how to train intelligent algorithms to decode those signals. Now, we are ready to put all this knowledge into action by building a real-world BCI application: a P300 speller. P300 spellers are a groundbreaking technology that allows individuals with severe motor impairments to communicate by simply focusing their attention on letters on a screen. By harnessing the power of the P300 event-related potential, a brain response elicited by rare or surprising stimuli, these spellers open up a world of communication possibilities for those who might otherwise struggle to express themselves. In this blog, we will guide you through the step-by-step process of building a P300 speller using Python, MNE-Python, and scikit-learn. Get ready for a hands-on adventure in BCI development as we translate brainwaves into letters and words!

Step-by-Step Implementation: A Hands-on BCI Project

1. Loading the Dataset

Introducing the BNCI Horizon 2020 Dataset: A Rich Resource for P300 Speller Development

For this project, we'll use the BNCI Horizon 2020 dataset, a publicly available EEG dataset specifically designed for P300 speller research. This dataset offers several advantages:

- Large Sample Size: It includes recordings from a substantial number of participants, providing a diverse range of P300 responses.

- Standardized Paradigm: The dataset follows a standardized experimental protocol, ensuring consistency and comparability across recordings.

- Detailed Metadata: It provides comprehensive metadata, including information about stimulus presentation, participant responses, and electrode locations.

This dataset is well-suited for our P300 speller project because it provides high-quality EEG data recorded during a classic P300 speller paradigm, allowing us to focus on the core signal processing and machine learning steps involved in building a functional speller.

Loading the Data with MNE-Python: Accessing the Brainwave Symphony

To load the BNCI Horizon 2020 dataset using MNE-Python, you'll need to download the data files from the dataset's website (http://bnci-horizon-2020.eu/database/data-sets). Once you have the files, you can use the following code snippet to load a specific participant's data:

import mne

# Set the path to the dataset directory

data_path = '<path_to_dataset_directory>'

# Load the raw EEG data for a specific participant

raw = mne.io.read_raw_gdf(data_path + '/A01T.gdf', preload=True)

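Replace <path_to_dataset_directory> with the actual path to the directory where you've stored the dataset files. The file name 'A01T.gdf' is a placeholder that follows a participant ("A01") and session ("T" for training) naming convention; file names and formats differ between the datasets in the BNCI Horizon 2020 database, so substitute the recording you actually downloaded.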

2. Data Preprocessing: Refining the EEG Signals for P300 Detection

Raw EEG data is often a mixture of brain signals, artifacts, and noise. Before we can effectively detect the P300 component, we need to clean up the data and isolate the relevant frequencies.

Channel Selection: Focusing on the P300's Neighborhood

The P300 component is typically most prominent over the central-parietal region of the scalp. Therefore, we'll select channels that capture activity from this area. Commonly used channels for P300 detection include:

- Cz: The electrode located at the vertex of the head, directly over the central sulcus.

- Pz: The electrode located over the parietal lobe, slightly posterior to Cz.

- Surrounding Electrodes: Additional electrodes surrounding Cz and Pz, such as CPz, FCz, and P3/P4, can also provide valuable information.

These electrodes are chosen because they tend to be most sensitive to the positive voltage deflection that characterizes the P300 response.

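# Select the desired channels (names must match the entries in raw.ch_names)

channels = ['Cz', 'Pz', 'CPz', 'FCz', 'P3', 'P4']

# Create a new raw object with only the selected channels (copy first, because pick() modifies the object in place)

raw_selected = raw.copy().pick(channels)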

Filtering: Tuning into the P300 Frequency

The P300 component is a relatively slow brainwave, typically occurring in the frequency range of 0.1 Hz to 10 Hz. Filtering helps us remove unwanted frequencies outside this range, enhancing the signal-to-noise ratio for P300 detection.

We'll apply a band-pass filter to the selected EEG channels, using cutoff frequencies of 0.1 Hz and 10 Hz:

# Apply a band-pass filter from 0.1 Hz to 10 Hz

raw_filtered = raw_selected.filter(l_freq=0.1, h_freq=10)

This filter removes slow drifts (below 0.1 Hz) and high-frequency noise (above 10 Hz), allowing the P300 component to stand out more clearly.

Artifact Removal (Optional): Combating Unwanted Signals

Depending on the quality of the EEG data and the presence of artifacts, we might need to apply additional artifact removal techniques. Independent Component Analysis (ICA) is a powerful method for separating independent sources of activity in EEG recordings. If the BNCI Horizon 2020 dataset contains significant artifacts, we can use ICA to identify and remove components related to eye blinks, muscle activity, or other sources of interference.

3. Epoching and Averaging: Isolating the P300 Response

To capture the brain's response to specific stimuli, we'll create epochs, time-locked segments of EEG data centered around events of interest.

Defining Epochs: Capturing the P300 Time Window

We'll define epochs around both target stimuli (the letters the user is focusing on) and non-target stimuli (all other letters). The epoch time window should capture the P300 response, typically occurring between 300 and 500 milliseconds after the stimulus onset. We'll use a window of -200 ms to 800 ms to include a baseline period and capture the full P300 waveform.

# Define event IDs for target and non-target stimuli (refer to dataset documentation)

event_id = {'target': 1, 'non-target': 0}

# Set the epoch time window

tmin = -0.2 # 200 ms before stimulus onset

tmax = 0.8 # 800 ms after stimulus onset

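# Convert the recording's annotations into an events array (the codes in event_id must match those used by the dataset)

events, _ = mne.events_from_annotations(raw_filtered)

# Create epochs

epochs = mne.Epochs(raw_filtered, events, event_id, tmin, tmax, baseline=(-0.2, 0), preload=True)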

Baseline Correction: Removing Pre-Stimulus Bias

Baseline correction involves subtracting the average activity during the baseline period (-200 ms to 0 ms) from each epoch. This removes any pre-existing bias in the EEG signal, ensuring that the measured response is truly due to the stimulus.

Averaging Evoked Responses: Enhancing the P300 Signal

To enhance the P300 signal and reduce random noise, we'll average the epochs for target and non-target stimuli separately. This averaging process reveals the event-related potential (ERP), a characteristic waveform reflecting the brain's response to the stimulus.

# Average the epochs for target and non-target stimuli

evoked_target = epochs['target'].average()

evoked_non_target = epochs['non-target'].average()
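Why averaging helps can be checked with a small simulation: the time-locked response adds up across epochs, while independent noise shrinks roughly with the square root of the number of epochs. Everything in the sketch below is synthetic, including the Gaussian-shaped "P300", the noise level, and the sampling rate.

```python
import numpy as np

rng = np.random.default_rng(1)
fs = 250                                         # assumed sampling rate in Hz
times = np.arange(-0.2, 0.8, 1 / fs)             # epoch time axis in seconds

# Idealised P300: a Gaussian bump peaking 400 ms after the stimulus (in microvolts)
p300 = 5.0 * np.exp(-((times - 0.4) ** 2) / (2 * 0.05 ** 2))

# 100 simulated target epochs: the same P300 buried in much larger background noise
n_epochs = 100
noise_std = 10.0
epochs = p300 + rng.normal(0.0, noise_std, size=(n_epochs, times.size))

erp = epochs.mean(axis=0)                        # averaged evoked response

single_trial_error = np.std(epochs[0] - p300)    # close to noise_std
average_error = np.std(erp - p300)               # close to noise_std / sqrt(n_epochs)
```

With 100 epochs the residual noise in the average is about a tenth of the single-trial noise, which is why the P300 is visible in averaged data long before it can be seen in individual trials.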

4. Feature Extraction: Quantifying the P300

Selecting Features: Capturing the P300's Signature

The P300 component is characterized by a positive voltage deflection peaking around 300-500 ms after the stimulus onset. We'll select features that capture this signature:

- Peak Amplitude: The maximum amplitude of the P300 component.

- Mean Amplitude: The average amplitude within a specific time window around the P300 peak.

- Latency: The time it takes for the P300 component to reach its peak amplitude.

These features provide a quantitative representation of the P300 response, allowing us to train a classifier to distinguish between target and non-target stimuli.

Extracting Features: From Waveforms to Numbers

We can extract these features from the individual epochs with a few NumPy operations. The classifier needs one feature vector per epoch, so we work on single trials here rather than on the averaged evoked responses:

import numpy as np

# Restrict a copy of the epochs to the 300-500 ms window where the P300 is expected

window = epochs.copy().crop(tmin=0.3, tmax=0.5)

data = window.get_data() # shape: (n_epochs, n_channels, n_times)

# Extract peak amplitude per epoch and channel

peak_amplitude = data.max(axis=2)

# Extract mean amplitude within the time window

mean_amplitude = data.mean(axis=2)

# Extract latency of the peak (in seconds) per epoch and channel

latency = window.times[data.argmax(axis=2)]

5. Classification: Training the Brainwave Decoder

Choosing a Classifier: LDA for P300 Speller Decoding

Linear Discriminant Analysis (LDA) is a suitable classifier for P300 spellers due to its simplicity, efficiency, and ability to handle high-dimensional data. It seeks to find a linear combination of features that best separates the classes (target vs. non-target).

Training the Model: Learning from Brainwaves

We'll train the LDA classifier using the extracted features:

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Create an LDA object

lda = LinearDiscriminantAnalysis()

# Combine the features into a data matrix with one row per epoch

X = np.hstack((peak_amplitude, mean_amplitude, latency))

# Create a label vector (1 for target, 0 for non-target)

y = (epochs.events[:, -1] == event_id['target']).astype(int)

# Train the LDA model

lda.fit(X, y)

Feature selection plays a crucial role here. By choosing features that effectively capture the P300 response, we improve the classifier's ability to distinguish between target and non-target stimuli.

6. Visualization: Validating Our Progress

Visualizing Preprocessed Data and P300 Responses

Visualizations help us understand the data and validate our preprocessing steps:

- Plot Averaged Epochs: Use evoked_target.plot() and evoked_non_target.plot() to visualize the average target and non-target epochs, confirming the presence of the P300 component in the target epochs.

- Topographical Plot: Use evoked_target.plot_topomap() to visualize the scalp distribution of the P300 component, ensuring it's most prominent over the expected central-parietal region.

Performance Evaluation: Assessing Speller Accuracy

Now that we've built our P300 speller, it's crucial to evaluate its performance. We need to assess how accurately it can distinguish between target and non-target stimuli, and consider practical factors that might influence its usability in real-world settings.

Cross-Validation: Ensuring Robustness and Generalizability

To obtain a reliable estimate of our speller's performance, we'll use k-fold cross-validation. This technique involves splitting the data into k folds, training the model on k-1 folds, and testing it on the remaining fold. Repeating this process k times, with each fold serving as the test set once, gives us a robust measure of the model's ability to generalize to unseen data.

from sklearn.model_selection import cross_val_score

# Perform 5-fold cross-validation

scores = cross_val_score(lda, X, y, cv=5)

# Print the average accuracy across the folds

print("Average accuracy: %0.2f" % scores.mean())

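This code runs 5-fold cross-validation with the LDA classifier and prints the average accuracy across the folds. Note that cross_val_score fits a fresh copy of the classifier on each training split, so the result does not depend on the earlier lda.fit(X, y) call.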
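As a hedged illustration of the same idea, the sketch below makes the fold structure explicit and reports balanced accuracy next to plain accuracy. The feature matrix is synthetic (an assumption standing in for the P300 features built earlier), and the names X_demo and y_demo are hypothetical.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Synthetic stand-in for the P300 feature matrix (assumption, not real EEG):
# 40 "target" and 160 "non-target" epochs with 4 features each.
rng = np.random.default_rng(0)
X_demo = np.vstack([rng.normal(1.0, 1.0, size=(40, 4)),
                    rng.normal(0.0, 1.0, size=(160, 4))])
y_demo = np.concatenate([np.ones(40), np.zeros(160)])

# Stratified folds keep the target/non-target ratio the same in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# cross_val_score fits a fresh clone of the classifier on each training split.
accuracy_scores = cross_val_score(LinearDiscriminantAnalysis(), X_demo, y_demo, cv=cv)

# Balanced accuracy averages the per-class recall, so always predicting
# "non-target" no longer looks good on imbalanced data.
balanced_scores = cross_val_score(LinearDiscriminantAnalysis(), X_demo, y_demo,
                                  cv=cv, scoring="balanced_accuracy")

print("Accuracy per fold:", np.round(accuracy_scores, 2))
print("Mean accuracy: %0.2f (+/- %0.2f)" % (accuracy_scores.mean(), accuracy_scores.std()))
print("Mean balanced accuracy: %0.2f" % balanced_scores.mean())
```

Reporting the spread across folds alongside the mean gives a rough sense of how stable the estimate is.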

Metrics for P300 Spellers: Beyond Accuracy

Accuracy, the proportion of correctly classified stimuli, is a key metric for P300 spellers, but it says nothing about how fast a user can communicate. A complementary metric addresses this:

- Information Transfer Rate (ITR): Measures the speed of communication, taking into account the number of possible choices and the accuracy of selection. A higher ITR indicates a faster and more efficient speller.
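The post does not give a formula for ITR, so the sketch below assumes the widely used Wolpaw definition, in which every choice is equally likely and errors are spread evenly over the wrong choices. The function names and the example numbers (36 symbols, 90% accuracy, 4 selections per minute) are hypothetical.

```python
import math


def itr_bits_per_selection(n_choices: int, accuracy: float) -> float:
    """Wolpaw ITR in bits per selection (assumes equally likely choices)."""
    if n_choices < 2:
        raise ValueError("n_choices must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    bits = math.log2(n_choices)
    # Each term is skipped at its limit, where p * log2(p) tends to 0.
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_choices - 1))
    return bits


def itr_bits_per_minute(n_choices: int, accuracy: float, selections_per_minute: float) -> float:
    """Scale bits per selection by how many selections the user makes per minute."""
    return itr_bits_per_selection(n_choices, accuracy) * selections_per_minute


# Hypothetical 6x6 speller matrix, 90% selection accuracy, 4 selections per minute.
bits = itr_bits_per_selection(36, 0.90)
rate = itr_bits_per_minute(36, 0.90, 4.0)
print("Bits per selection: %0.2f" % bits)
print("Bits per minute: %0.2f" % rate)
```

Under this definition, accuracy at chance level (1 / n_choices) yields zero bits, which is why a fast but inaccurate speller can still score poorly.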

Practical Considerations: Bridging the Gap to Real-World Use

Several practical factors can influence the performance and usability of P300 spellers:

- User Variability: P300 responses can vary significantly between individuals due to factors like age, attention, and neurological conditions. To address this, personalized calibration is crucial, where the speller is adjusted to each user's unique brain responses. Adaptive algorithms can also be employed to continuously adjust the speller based on the user's performance.

- Fatigue and Attention: Prolonged use can lead to fatigue and decreased attention, affecting P300 responses and speller accuracy. Strategies to mitigate this include incorporating breaks, using engaging stimuli, and employing algorithms that can detect and adapt to changes in user state.

- Training Duration: The amount of training a user receives can impact their proficiency with the speller. Sufficient practice helps users focus consistently on the target stimulus, which tends to produce more reliable P300 responses and better performance.

Empowering Communication with P300 Spellers

We've successfully built a P300 speller, witnessing firsthand the power of EEG, signal processing, and machine learning to create a functional BCI application. These spellers hold immense potential as a communication tool, enabling individuals with severe motor impairments to express themselves, connect with others, and participate more fully in the world.

Further Reading and Resources

- Review article: Pan J et al. Advances in P300 brain-computer interface spellers: toward paradigm design and performance evaluation. Front Hum Neurosci. 2022 Dec 21;16:1077717. doi: 10.3389/fnhum.2022.1077717. PMID: 36618996; PMCID: PMC9810759.

- Dataset: BNCI Horizon 2020 P300 dataset: http://bnci-horizon-2020.eu/database/data-sets

- Tutorial: Qt for Python (PySide) documentation for GUI development (optional): https://doc.qt.io/qtforpython/

Future Directions: Advancing P300 Speller Technology

The field of P300 speller development is constantly evolving. Emerging trends include:

- Deep Learning: Applying deep learning algorithms to improve P300 detection accuracy and robustness.

- Multimodal BCIs: Combining EEG with other brain imaging modalities (e.g., fNIRS) or physiological signals (e.g., eye tracking) to enhance speller performance.

- Hybrid Approaches: Integrating P300 spellers with other BCI paradigms (e.g., motor imagery) to create more flexible and versatile communication systems.

Next Stop: Motor Imagery BCIs

In the next blog post, we'll explore motor imagery BCIs, a fascinating paradigm where users control devices by simply imagining movements. We'll dive into the brain signals associated with motor imagery, learn how to extract features, and build a classifier to decode these intentions.

Welcome back to our BCI crash course! We have journeyed from the fundamentals of BCIs to the intricate world of the brain's electrical activity, mastered the art of signal processing, and equipped ourselves with powerful Python libraries. Now, it's time to unleash the magic of machine learning to decode the secrets hidden within brainwaves. In this blog, we will explore essential machine learning techniques for BCI, focusing on practical implementation using Python and scikit-learn. We will learn how to select relevant features from preprocessed EEG data, train classification models to decode user intent or predict mental states, and evaluate the performance of our BCI models using robust methods.

Feature Selection: Choosing the Right Ingredients for Your BCI Model

Imagine you're a chef preparing a gourmet dish. You wouldn't just throw all the ingredients into a pot without carefully selecting the ones that contribute to the desired flavor profile. Similarly, in machine learning for BCI, feature selection is the art of choosing the most relevant and informative features from our preprocessed EEG data.

Why Feature Selection? Crafting the Perfect EEG Recipe

Feature selection is crucial for several reasons:

- Reducing Dimensionality: Raw EEG data is high-dimensional, containing recordings from multiple electrodes over time. Feature selection reduces this dimensionality, making it easier for machine learning algorithms to learn patterns and avoid getting lost in irrelevant information. Think of this like simplifying a complex recipe to its essential elements.

- Improving Model Performance: By focusing on the most informative features, we can improve the accuracy, speed, and generalization ability of our BCI models. This is like using the highest quality ingredients to enhance the taste of our dish.

- Avoiding Overfitting: Overfitting occurs when a model learns the training data too well, capturing noise and random fluctuations that don't generalize to new data. Feature selection helps prevent overfitting by focusing on the most robust and generalizable patterns. This is like ensuring our recipe works consistently, even with slight variations in ingredients.

Filter Methods: Sifting Through the EEG Signals

Filter methods select features based on their intrinsic characteristics, independent of the chosen machine learning algorithm. Here are two common filter methods:

- Variance Thresholding: Removes features with low variance, assuming they contribute little to classification. For example, in an EEG-based motor imagery BCI, if a feature representing power in a specific frequency band shows very little variation across trials of imagining left or right hand movements, it's likely not informative for distinguishing these intentions. Because variance depends on a feature's units and scale, a threshold such as 0.1 is only meaningful relative to how your features are scaled. We can use scikit-learn's VarianceThreshold class to eliminate these low-variance features:

from sklearn.feature_selection import VarianceThreshold

# Create a VarianceThreshold object with a threshold of 0.1

selector = VarianceThreshold(threshold=0.1)

# Select features from the EEG data matrix X

X_new = selector.fit_transform(X)

- SelectKBest: Selects the top k features based on statistical tests that measure their relationship with the target variable. For instance, in a P300-based BCI, we might use an ANOVA F-value test to select features that show the most significant difference in activity between target and non-target stimuli. Scikit-learn's SelectKBest class makes this easy:

from sklearn.feature_selection import SelectKBest, f_classif

# Create a SelectKBest object using the ANOVA F-value test and selecting 10 features

selector = SelectKBest(f_classif, k=10)

# Select features from the EEG data matrix X

X_new = selector.fit_transform(X, y)
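One caveat with the snippets above: calling fit_transform on the full X before any train/test split lets label information from the test trials influence which features are kept. A possible way around this, sketched below under the assumption of a synthetic feature matrix (X_demo and y_demo are hypothetical), is to put both filters inside a scikit-learn pipeline so they are refit on each training fold.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.feature_selection import SelectKBest, VarianceThreshold, f_classif
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Synthetic stand-in for an EEG feature matrix (assumption, not real data):
# 120 trials x 30 features, where only the first 3 features carry class information.
rng = np.random.default_rng(1)
y_demo = np.repeat([0, 1], 60)
X_demo = rng.normal(size=(120, 30))
X_demo[:, :3] += 1.5 * y_demo[:, None]
X_demo[:, -1] = 0.0  # a constant feature that VarianceThreshold should drop

filter_pipeline = make_pipeline(
    VarianceThreshold(threshold=0.0),  # removes constant features only
    SelectKBest(f_classif, k=5),       # keeps the 5 highest ANOVA F-values
    LinearDiscriminantAnalysis(),
)

# Each fold refits the selectors on its own training trials, so the held-out
# trials never influence which features are chosen.
pipeline_scores = cross_val_score(filter_pipeline, X_demo, y_demo, cv=5)
print("Mean accuracy: %0.2f" % pipeline_scores.mean())

# Fit once on all data only to inspect which original columns survive.
filter_pipeline.fit(X_demo, y_demo)
kept_after_variance = filter_pipeline.named_steps["variancethreshold"].get_support(indices=True)
selected = kept_after_variance[filter_pipeline.named_steps["selectkbest"].get_support()]
print("Selected feature indices:", selected)
```

The same pattern applies to any selector that looks at the labels, including the wrapper and embedded methods described next.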

Wrapper Methods: Testing Feature Subsets

Wrapper methods evaluate different subsets of features by training and evaluating a machine learning model with each subset. This is like experimenting with different ingredient combinations to find the best flavor profile for our dish.

- Recursive Feature Elimination (RFE): Iteratively removes less important features based on the performance of the chosen estimator. For example, in a motor imagery BCI, we might use RFE with a linear SVM classifier to identify the EEG channels and frequency bands that contribute most to distinguishing left and right hand movements. Scikit-learn's RFE class implements this method:

from sklearn.feature_selection import RFE

from sklearn.svm import SVC

# Create an RFE object with a linear SVM classifier and selecting 10 features

selector = RFE(estimator=SVC(kernel='linear'), n_features_to_select=10)

# Select features from the EEG data matrix X

X_new = selector.fit_transform(X, y)
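After fitting, RFE exposes which features survived and in what order the others were dropped. The sketch below shows one way to read those attributes; the data is synthetic (an assumption), with two informative columns planted at hypothetical indices 0 and 5, and the features are standardized first because linear SVM weights are only comparable across features on a common scale.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for EEG features (assumption): 100 trials x 12 features,
# with class information planted in columns 0 and 5 only.
rng = np.random.default_rng(2)
y_demo = np.repeat([0, 1], 50)
X_demo = rng.normal(size=(100, 12))
X_demo[:, [0, 5]] += 2.0 * y_demo[:, None]

# Put all features on the same scale before comparing SVM weights.
X_scaled = StandardScaler().fit_transform(X_demo)

# Drop one feature per iteration until 4 remain.
rfe = RFE(estimator=SVC(kernel="linear"), n_features_to_select=4, step=1)
rfe.fit(X_scaled, y_demo)

selected_idx = np.flatnonzero(rfe.support_)
print("Selected feature indices:", selected_idx)
# ranking_ is 1 for kept features; larger numbers were eliminated earlier.
print("Elimination ranking:", rfe.ranking_)
```

In a real BCI, mapping the selected indices back to channel and frequency-band names is what makes the result interpretable.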

Embedded Methods: Learning Features During Model Training

Embedded methods incorporate feature selection as part of the model training process itself.

- L1 Regularization (LASSO): Adds a penalty on the absolute size of the weights to the model's loss function. This encourages sparsity, driving the weights of less important features to exactly zero so that they drop out of the model. For example, in a BCI for detecting mental workload, L1 regularization during logistic regression training can help identify the EEG features that most reliably distinguish high and low workload states. Scikit-learn's LogisticRegression class supports L1 regularization:

from sklearn.linear_model import LogisticRegression

# Create a Logistic Regression model with L1 regularization

model = LogisticRegression(penalty='l1', solver='liblinear')

# Train the model on the EEG data (X) and labels (y)

model.fit(X, y)
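How many features survive depends on the regularization strength. In scikit-learn, C is the inverse of that strength, so smaller values of C give sparser models. The sketch below counts the non-zero weights for a few values of C on synthetic data (an assumption; the C values and variable names are hypothetical choices for illustration).

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for workload features (assumption): 200 trials x 20 features,
# with class information in the first 2 columns only.
rng = np.random.default_rng(3)
y_demo = np.repeat([0, 1], 100)
X_demo = rng.normal(size=(200, 20))
X_demo[:, :2] += 1.5 * y_demo[:, None]

# The L1 penalty treats all weights alike, so features should share a scale.
X_scaled = StandardScaler().fit_transform(X_demo)

nonzero_by_C = {}
for C in (0.01, 0.1, 1.0):
    model = LogisticRegression(penalty="l1", solver="liblinear", C=C)
    model.fit(X_scaled, y_demo)
    # Features with a zero weight have effectively been removed from the model.
    nonzero_by_C[C] = int(np.count_nonzero(model.coef_))
    print("C=%g keeps %d of %d features" % (C, nonzero_by_C[C], X_scaled.shape[1]))
```

In practice C is usually chosen by cross-validation rather than fixed in advance.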

Practical Considerations: Choosing the Right Tools for the Job

The choice of feature selection method depends on several factors, including the size of the dataset, the type of BCI application, the computational resources available, and the desired balance between accuracy and model complexity. It's often helpful to experiment with different methods and evaluate their performance on your specific data.

Classification Algorithms: Training Your BCI Model to Decode Brain Signals

Now that we've carefully selected the most informative features from our EEG data, it's time to train a classification algorithm that can learn to decode user intent, predict mental states, or control external devices. This is where the magic of machine learning truly comes to life, transforming processed brainwaves into actionable insights.

Loading and Preparing Data: Setting the Stage for Learning

Before we unleash our classification algorithms, let's quickly recap loading our EEG data and preparing it for training:

- Loading the Dataset: For this example, we'll continue working with the MNE sample dataset. If you haven't already loaded it, refer to the previous blog for instructions.

- Feature Extraction: We'll assume you've already extracted relevant features from the EEG data, such as band power in specific frequency bands or time-domain features like peak amplitude and latency.

- Splitting Data: Divide the data into training and testing sets using scikit-learn's train_test_split function:

from sklearn.model_selection import train_test_split

# Split the data into 80% for training and 20% for testing

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

This ensures we have a separate set of data to evaluate the performance of our trained model on unseen examples.
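Two refinements are often worth considering at this step: stratifying the split so both sets keep the same class ratio, and fitting any scaling on the training set only. The sketch below illustrates both on synthetic, imbalanced data (an assumption; the 20/80 class ratio and the *_demo names are hypothetical).

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for extracted EEG features (assumption):
# 100 trials x 6 features, 20 trials of class 1 and 80 of class 0.
rng = np.random.default_rng(4)
X_demo = rng.normal(size=(100, 6))
y_demo = np.array([1] * 20 + [0] * 80)

# stratify=y_demo keeps the 20/80 class ratio in both the training and test sets.
X_train, X_test, y_train, y_test = train_test_split(
    X_demo, y_demo, test_size=0.2, stratify=y_demo, random_state=42
)

# Learn the scaling from the training trials only, then apply it to both sets,
# so no statistics from the test trials leak into training.
scaler = StandardScaler().fit(X_train)
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)

print("Train class counts:", np.bincount(y_train))
print("Test class counts:", np.bincount(y_test))
```

Without stratification, a small test set can end up with very few trials of the rarer class, which makes the accuracy estimate noisy.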

Linear Discriminant Analysis (LDA): Finding the Optimal Projection

Linear Discriminant Analysis (LDA) is a classic linear classification method that seeks to find a projection of the data that maximizes the separation between classes. Think of it like shining a light on our EEG feature space in a way that makes the different classes (e.g., imagining left vs. right hand movements) stand out as distinctly as possible.

Here's how to implement LDA with scikit-learn:

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Create an LDA object

lda = LinearDiscriminantAnalysis()

# Train the LDA model on the training data

lda.fit(X_train, y_train)

# Make predictions on the test data

y_pred = lda.predict(X_test)

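LDA is often a good starting point for BCI classification due to its simplicity and speed. With many features and few trials, however, its covariance estimate becomes unreliable, so high-dimensional EEG feature sets usually call for a regularized (shrinkage) variant.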
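When there are few trials relative to the number of features, a regularized covariance estimate is a common remedy. The sketch below compares plain LDA with scikit-learn's shrinkage option on synthetic data (an assumption; 60 trials and 50 features are hypothetical numbers chosen to mimic a small calibration session). Which variant wins will depend on your data.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import cross_val_score

# Synthetic stand-in (assumption): few trials, many features,
# with weak class information in the first 5 columns.
rng = np.random.default_rng(5)
n_trials, n_features = 60, 50
y_demo = np.repeat([0, 1], n_trials // 2)
X_demo = rng.normal(size=(n_trials, n_features))
X_demo[:, :5] += 0.8 * y_demo[:, None]

# Default solver, no regularization of the covariance estimate.
plain_lda = LinearDiscriminantAnalysis(solver="svd")

# shrinkage="auto" uses the Ledoit-Wolf estimate; it requires the "lsqr" or "eigen" solver.
shrinkage_lda = LinearDiscriminantAnalysis(solver="lsqr", shrinkage="auto")

plain_scores = cross_val_score(plain_lda, X_demo, y_demo, cv=5)
shrunk_scores = cross_val_score(shrinkage_lda, X_demo, y_demo, cv=5)

print("Plain LDA mean accuracy: %0.2f" % plain_scores.mean())
print("Shrinkage LDA mean accuracy: %0.2f" % shrunk_scores.mean())
```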

Support Vector Machines (SVM): Drawing Boundaries in Feature Space

Support Vector Machines (SVM) are powerful classification algorithms that aim to find an optimal hyperplane that separates different classes in the feature space. Imagine drawing a line (or a higher-dimensional plane) between data points representing, for example, different mental states, positioned so that the margin to the nearest points of each class is as wide as possible.

Here's how to use SVM with scikit-learn:

from sklearn.svm import SVC

# Create an SVM object with a linear kernel

svm = SVC(kernel='linear', C=1)

# Train the SVM model on the training data

svm.fit(X_train, y_train)

# Make predictions on the test data

y_pred = svm.predict(X_test)

SVMs offer flexibility through different kernels, which implicitly map the data into higher-dimensional spaces and so allow non-linear decision boundaries in the original feature space. Common kernels include:

- Linear Kernel: Suitable for linearly separable data.

- Polynomial Kernel: Creates polynomial decision boundaries.

- Radial Basis Function (RBF) Kernel: Creates smooth, non-linear decision boundaries.
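To see why the kernel choice matters, the sketch below cross-validates the three kernels on a deliberately non-linear toy problem: class membership depends on the distance from the origin, so no straight line can separate the classes. The data is synthetic (an assumption, not EEG), and the degree-2 polynomial is a hypothetical choice that happens to suit a circular boundary.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Toy problem (assumption): points outside a circle of radius 1.2 are class 1.
rng = np.random.default_rng(6)
X_demo = rng.normal(size=(200, 2))
y_demo = (np.hypot(X_demo[:, 0], X_demo[:, 1]) > 1.2).astype(int)

kernel_settings = {
    "linear": {"kernel": "linear"},
    "poly": {"kernel": "poly", "degree": 2},
    "rbf": {"kernel": "rbf"},
}

kernel_scores = {}
for name, params in kernel_settings.items():
    # Scaling inside the pipeline is refit on each training fold.
    clf = make_pipeline(StandardScaler(), SVC(C=1.0, **params))
    kernel_scores[name] = cross_val_score(clf, X_demo, y_demo, cv=5).mean()
    print(f"{name:>6}: mean accuracy {kernel_scores[name]:.2f}")
```

On real EEG features the gap between kernels is often much smaller, and a linear kernel is a reasonable first choice when trials are scarce.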

Other Classifiers: Expanding Your BCI Toolbox

Many other classification algorithms can be applied to BCI data, each with its own strengths and weaknesses:

- Logistic Regression: A simple yet effective linear model for binary classification.

- Decision Trees: Tree-based models that create a series of rules to classify data.

- Random Forests: An ensemble method that combines multiple decision trees for improved performance.
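Because these classifiers share the same scikit-learn interface, trying them side by side takes only a short loop. The sketch below does this on synthetic data (an assumption; the hyperparameters shown are hypothetical starting points, not tuned recommendations).

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for EEG features (assumption): 150 trials x 8 features,
# with class information in the first 3 columns.
rng = np.random.default_rng(7)
y_demo = np.repeat([0, 1], 75)
X_demo = rng.normal(size=(150, 8))
X_demo[:, :3] += 1.0 * y_demo[:, None]

candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "decision_tree": DecisionTreeClassifier(max_depth=3, random_state=0),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

classifier_scores = {}
for name, clf in candidates.items():
    # Same data and same folds for every model, so the scores are comparable.
    scores = cross_val_score(clf, X_demo, y_demo, cv=5)
    classifier_scores[name] = scores.mean()
    print(f"{name}: mean accuracy {scores.mean():.2f} (+/- {scores.std():.2f})")
```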

Choosing the Right Algorithm: Finding the Perfect Match

The best classification algorithm for your BCI application depends on several factors, including the nature of your data, the complexity of the task, and the desired balance between accuracy, speed, and interpretability. Here's a table comparing some common algorithms: